Guide to upgrade an EKS cluster from 1.29 to 1.30 using Terraform

Posted By

Ramesh Tathe

It's time to upgrade your EKS clusters! Following up on my previous blog about upgrading an EKS cluster from 1.28 to 1.29, this post covers the Kubernetes 1.30 release, nicknamed Uwubernetes, which brings new features and improvements. If you're worried about navigating the upgrade process alone, don't be. This blog walks you through a smooth and efficient upgrade using Terraform, so your workloads transition cleanly into the Uwubernetes era.

EKS version 1.30 changes:

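- Newly created managed node groups now default to Amazon Linux 2023 (AL2023) as the node operating system.

- EKS adds a new label, topology.k8s.aws/zone-id, to worker nodes to identify the Availability Zone ID the node runs in.

- EKS no longer sets the default annotation on the gp2 StorageClass for newly created clusters. This has no impact if you reference the storage class by name.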
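Because a new 1.30 cluster has no default StorageClass, a PersistentVolumeClaim that omits storageClassName may stay Pending. One possible way to handle this from Terraform is to define your own default class. This is a minimal sketch, not part of the original setup: it assumes the hashicorp/kubernetes provider is already configured against the cluster and that the aws-ebs-csi-driver add-on is installed. The class name gp3-default is a hypothetical choice.

```hcl
# Sketch only: assumes a configured "kubernetes" provider and the EBS CSI driver add-on.
resource "kubernetes_storage_class_v1" "gp3_default" {
  metadata {
    name = "gp3-default" # hypothetical name

    annotations = {
      # Marks this class as the cluster default for PVCs without storageClassName
      "storageclass.kubernetes.io/is-default-class" = "true"
    }
  }

  storage_provisioner    = "ebs.csi.aws.com"
  reclaim_policy         = "Delete"
  volume_binding_mode    = "WaitForFirstConsumer"
  allow_volume_expansion = true

  parameters = {
    type = "gp3"
  }
}
```

Workloads that already reference gp2 by name are unaffected and do not need this.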

What are the changes in Kubernetes 1.30 release?

You can find a complete list of changes and updates in Kubernetes version 1.30 here. These are the updates that caught my eye.

- Robust VolumeManager reconstruction after kubelet restart (SIG Storage): This update to the volume manager lets the kubelet gather more detailed information about how volumes are currently mounted during startup. That information helps ensure volumes are cleaned up reliably after a kubelet restart or machine reboot. Nothing changes for users or cluster administrators. While the feature was maturing, the NewVolumeManagerReconstruction feature gate made it possible to fall back to the old behavior temporarily if something went wrong. Now that the feature is stable, the feature gate is locked and cannot be disabled.

- Prevent unauthorized volume mode conversion during volume restore (SIG Storage): The control plane strictly prevents unauthorized changes to volume modes when restoring snapshots to PersistentVolume. To allow such changes, cluster administrators must provide specific permissions to the relevant identities (like ServiceAccounts used for storage integration).

- Pod Scheduling Readiness (SIG Scheduling): Kubernetes avoids scheduling Pods until the required resources are provisioned in the cluster. This control enables the implementation of custom rules, such as quotas and security measures, to regulate Pod scheduling.

- Min domains in PodTopologySpread (SIG Scheduling): The minDomains parameter for PodTopologySpread constraints is now stable. This allows you to specify the minimum number of domains for Pod placement. This feature is designed to work with Cluster Autoscaler.
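To make the minDomains item concrete, here is a hedged sketch of a Deployment that asks the scheduler to spread replicas over at least three zones. It is not from the original setup: it assumes the hashicorp/kubernetes provider is configured, that the cluster spans three Availability Zones, and the name spread-demo is hypothetical. Note that minDomains is only valid together with whenUnsatisfiable = "DoNotSchedule".

```hcl
# Sketch only: hypothetical Deployment using the now-stable minDomains field.
resource "kubernetes_manifest" "spread_demo" {
  manifest = {
    apiVersion = "apps/v1"
    kind       = "Deployment"
    metadata = {
      name      = "spread-demo"
      namespace = "default"
    }
    spec = {
      replicas = 3
      selector = { matchLabels = { app = "spread-demo" } }
      template = {
        metadata = { labels = { app = "spread-demo" } }
        spec = {
          topologySpreadConstraints = [{
            maxSkew           = 1
            minDomains        = 3 # treat fewer than 3 matching zones as unbalanced
            topologyKey       = "topology.kubernetes.io/zone"
            whenUnsatisfiable = "DoNotSchedule"
            labelSelector     = { matchLabels = { app = "spread-demo" } }
          }]
          containers = [{
            name  = "nginx"
            image = "nginx"
          }]
        }
      }
    }
  }
}
```

If fewer than three zones currently have eligible nodes, the unscheduled Pods stay Pending, which is the signal an autoscaler can use to add capacity in a new zone.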

This release includes a total of 17 enhancements promoted to Stable:

- Container Resource based Pod Autoscaling

- Remove transient node predicates from KCCM's service controller

- Go workspaces for k/k

- Reduction of Secret-based Service Account Tokens

- CEL for Admission Control

- CEL-based admission webhook match conditions

- Pod Scheduling Readiness

- Min domains in PodTopologySpread

- Prevent unauthorized volume mode conversion during volume restore

- API Server Tracing

- Cloud Dual-Stack --node-ip Handling

- AppArmor support

- Robust VolumeManager reconstruction after kubelet restart

- kubectl delete: Add interactive(-i) flag

- Metric cardinality enforcement

- Field status.hostIPs added for Pod

- Aggregated Discovery

No deprecated API versions in Kubernetes 1.30 release


Steps to upgrade EKS with Terraform

First, deploy an EKS cluster running version 1.29 in AWS using Terraform. Here are screenshots of the cluster.

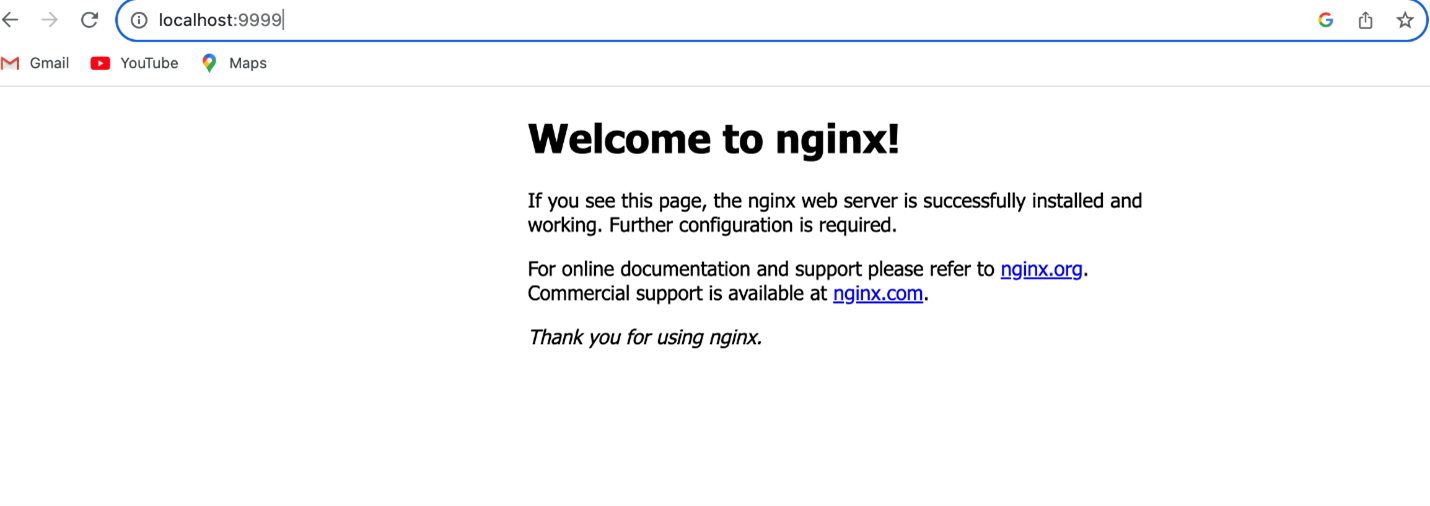

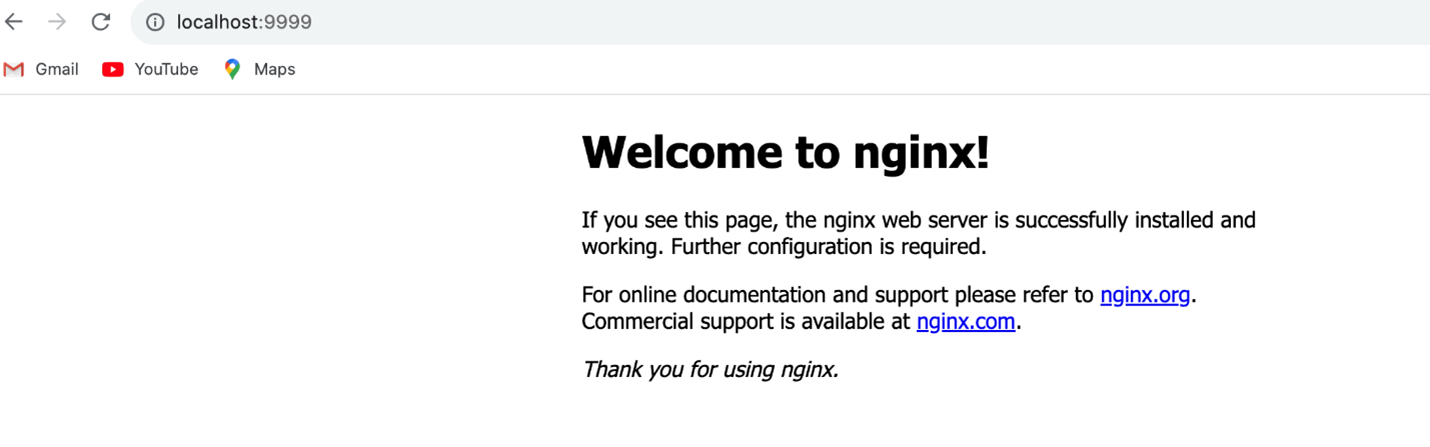

I deployed an nginx application in the cluster and verified it by port forwarding to local port 9999.

    $ kubectl get po -n nginx
    NAME                                READY   STATUS    RESTARTS   AGE
    nginx-deployment-848dd6cfb5-nnxh4   1/1     Running   0          13s

    $ kubectl port-forward -n nginx nginx-deployment-848dd6cfb5-nnxh4 9999:80
    Forwarding from 127.0.0.1:9999 -> 80
    Forwarding from [::1]:9999 -> 80
    Handling connection for 9999
    Handling connection for 9999

Access the nginx application here: http://localhost:9999

What are the prerequisites to upgrade an EKS cluster from 1.29 to 1.30?

Before upgrading the EKS cluster to v1.30, make sure the IAM policy attached to the cluster IAM role allows the ec2:DescribeAvailabilityZones action.
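If the cluster role relies on a custom policy, one way to grant the action from Terraform is an inline role policy. This is a minimal sketch under assumptions: the cluster role is the one created by the terraform-aws-modules/eks module used later in this post (exposed through its cluster_iam_role_name output), and the resource and policy names are hypothetical.

```hcl
# Sketch only: adds ec2:DescribeAvailabilityZones to the EKS cluster role.
resource "aws_iam_role_policy" "eks_describe_azs" {
  name = "eks-describe-availability-zones" # hypothetical policy name
  role = module.eks.cluster_iam_role_name  # assumes the role is managed by the eks module

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect   = "Allow"
      Action   = ["ec2:DescribeAvailabilityZones"]
      Resource = "*"
    }]
  })
}
```

Apply this before changing the cluster version so the permission is in place when the control plane upgrade starts.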

Upgrade EKS cluster

Update the Terraform script to upgrade the EKS cluster to version 1.30.

    terraform {
      # Remote state stored in S3
      backend "s3" {
        encrypt = true
        bucket  = "terraform-state-bucket"
        key     = "infra"
        region  = "ap-south-1"
      }
    }

    terraform {
      required_providers {
        aws = {
          source  = "hashicorp/aws"
          version = "~> 5.0"
        }
      }
    }

    provider "aws" {
      region = "ap-south-1"
    }

    module "eks" {
      source  = "terraform-aws-modules/eks/aws"
      version = "~> 20.0"

      cluster_name    = "eks-cluster"
      cluster_version = "1.30" # changed from 1.29

      cluster_endpoint_public_access = true

      # Add-on versions compatible with Kubernetes 1.30.
      # The v20 module reads addon_version and resolve_conflicts_on_update.
      cluster_addons = {
        coredns = {
          resolve_conflicts_on_update = "OVERWRITE"
          addon_version               = "v1.11.1-eksbuild.9"
        }
        kube-proxy = {
          resolve_conflicts_on_update = "OVERWRITE"
          addon_version               = "v1.30.0-eksbuild.3"
        }
        vpc-cni = {
          resolve_conflicts_on_update = "OVERWRITE"
          addon_version               = "v1.18.1-eksbuild.3"
        }
        aws-ebs-csi-driver = {
          resolve_conflicts_on_update = "OVERWRITE"
          addon_version               = "v1.31.1-eksbuild.1"
        }
      }

      vpc_id                   = "*******"
      subnet_ids               = ["*******", "*******", "*******"]
      control_plane_subnet_ids = ["*******", "*******", "*******"]

      eks_managed_node_groups = {
        group = {
          ami_type       = "AL2023_x86_64_STANDARD" # Amazon Linux 2023
          instance_types = ["t3a.medium"]

          min_size     = 1
          max_size     = 2
          desired_size = 1
        }
      }

      enable_cluster_creator_admin_permissions = true

      tags = {
        Environment = "dev"
        Terraform   = "true"
      }
    }
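The add-on versions above are pinned by hand. As an alternative way to find versions compatible with 1.30, the AWS provider's aws_eks_addon_version data source can look them up. This is a hedged sketch rather than part of my configuration; the local and output names are hypothetical.

```hcl
# Sketch only: look up the most recent add-on versions published for Kubernetes 1.30.
locals {
  addon_names = ["coredns", "kube-proxy", "vpc-cni", "aws-ebs-csi-driver"]
}

data "aws_eks_addon_version" "latest" {
  for_each = toset(local.addon_names)

  addon_name         = each.value
  kubernetes_version = "1.30"
  most_recent        = true
}

# Map of add-on name to the version string returned by EKS
output "latest_addon_versions" {
  value = { for name, v in data.aws_eks_addon_version.latest : name => v.version }
}
```

Pinning explicit versions, as in the module block, keeps upgrades repeatable. The lookup is mainly useful for deciding which versions to pin.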

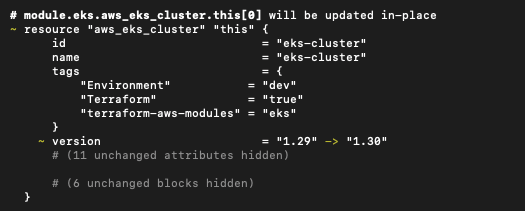

To preview the changes after modifying the Terraform script, run terraform plan.

    terraform plan

To apply the changes and upgrade the EKS cluster to version 1.30, run terraform apply.

    terraform apply
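To confirm the result from Terraform itself, you could expose the version the module reports and assert on it. This is a small sketch, assuming Terraform 1.5 or later for the check block; the output and check names are hypothetical.

```hcl
# Sketch only: surface and assert the Kubernetes version reported by the eks module.
output "cluster_version" {
  value = module.eks.cluster_version
}

output "cluster_platform_version" {
  value = module.eks.cluster_platform_version
}

# check blocks require Terraform >= 1.5; a failed assertion is reported as a warning
check "eks_version" {
  assert {
    condition     = module.eks.cluster_version == "1.30"
    error_message = "EKS control plane is not running Kubernetes 1.30."
  }
}
```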

Upgrading the control plane takes about 8-10 minutes, and upgrading the node group and the EKS add-ons takes another 25-30 minutes. Now, let's verify the nginx application that was already deployed. I verified it again by port forwarding to local port 9999.

    $ kubectl get po -n nginx
    NAME                                READY   STATUS    RESTARTS   AGE
    nginx-deployment-848dd6cfb5-t8pjf   1/1     Running   0          7m45s

    $ kubectl port-forward -n nginx nginx-deployment-848dd6cfb5-t8pjf 9999:80
    Forwarding from 127.0.0.1:9999 -> 80
    Forwarding from [::1]:9999 -> 80

The new pod name and age show that the nginx pod was recreated when the node group was upgraded to version 1.30. Now let's access the nginx application at http://localhost:9999

After upgrading the EKS cluster, verify that all other components running in it still work. These could include:

- Load Balancer Controller

- calico-node

- Cluster Autoscaler or Karpenter

- External Secrets Operator

- Kube State Metrics

- Metrics Server

- csi-secrets-store

- KEDA (event-driven autoscaler)

Conclusion

It's now much quicker to upgrade an EKS cluster: the control plane upgrade took only about 8-10 minutes. I used Terraform to upgrade both the EKS cluster and the node group to version 1.30, which made the process significantly easier. There were no major issues during the upgrade, and all workloads ran without problems afterwards.

My next blog will cover upgrading EKS from 1.30 to 1.31. Stay tuned!
