Guide to upgrade an EKS cluster from 1.28 to 1.29 using Terraform

Posted By

Ramesh Tathe

Kubernetes 1.28 introduced several enhancements, including improved network policy performance, extended support for Windows workloads, and advancements in CSI volume management. However, as with all software, it's essential to stay updated for the latest features, bug fixes, and security patches. Kubernetes 1.29 builds upon its predecessor, offering further optimizations, new capabilities, and addressing potential vulnerabilities. The 1.29 release is called Mandala - a symbol of the universe in its perfection. This guide will walk you through the process of upgrading your EKS cluster from 1.28 to 1.29 using Terraform, ensuring a smooth transition while leveraging the benefits of the newer version.

What are the changes in Kubernetes 1.29 release?

The complete list of changes and upgrades is in the Kubernetes 1.29 release notes. I will briefly cover the updates that caught my attention:

- Remove transient node predicates from KCCM's service controller: The service controller no longer re-synchronizes the load balancer node set when a node's transient state changes (for example, when a node flips between Ready and NotReady). This more efficient behavior is controlled by the StableLoadBalancerNodeSet feature gate.

- Reserve NodePort ranges for dynamic and static allocation: A NodePort service exposes a set of pods outside the cluster by opening the same port on every node, so external clients can reach the service through any node. The port number can be assigned dynamically, where the cluster picks one within the configured service NodePort range, or statically, where the user sets one within that range. This change splits the range into two bands, and dynamic allocation prefers the upper band, which lowers the risk of colliding with statically assigned ports.

- Priority and Fairness for API Server Requests: This is an alternative to the existing max-in-flight request handler in the apiserver that makes more distinctions among requests and provides prioritization and fairness among the categories of requests, in a user-configurable way.

- KMS v2 Improvements: This enables partially automated key rotation for the latest key without API server restarts. It also improves the reliability of the KMS plugin health check and the observability of envelope operations between kube-apiserver, KMS plugins, and KMS.

- Support paged LIST queries from the Kubernetes API: This will allow API consumers - especially those that must retrieve large sets of data - to retrieve results in pages to reduce the memory and size impact of those very large queries.

- ReadWriteOncePod PersistentVolume Access Mode: Kubernetes offers a new PersistentVolume access mode called ReadWriteOncePod. This mode restricts access to a PersistentVolume to a single pod on a single node. This differs from the existing ReadWriteOnce (RWO) access mode, which allows multiple pods on the same node to access the volume simultaneously, but still confines access to that specific node.

- Kubernetes Component Health SLIs: It intends to allow us to emit SLI data in a structured and consistent way, so that monitoring agents can consume this data at higher scrape intervals and create SLOs (service level objectives) and alerts off these SLIs (service level indicators).

- Introduce nodeExpandSecret in CSI PV source: This adds a NodeExpandSecret field to the CSI persistent volume source, so that the CSI client can send the secret to CSI drivers as part of the nodeExpandVolume request and the drivers can use it in node operations.

- Track Ready Pods in Job status: The Job status includes an "active" field that indicates the number of Job Pods currently in the Running or Pending state. This release adds a "ready" field that counts the number of Job Pods that have a Ready condition.

- Kubelet Resource Metrics Endpoint: The Kubelet Resource Metrics Endpoint is a new endpoint exposed by the kubelet to provide metrics required by the cluster-level Resource Metrics API. This endpoint uses the Prometheus text format and offers the essential metrics needed for the Resource Metrics API.
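To make the ReadWriteOncePod item above concrete, here is a minimal sketch of a claim that requests that access mode. The helper name `render_rwop_pvc`, the claim name, and the size are hypothetical, and the sketch assumes the cluster's default storage class is backed by a CSI driver, since ReadWriteOncePod only applies to CSI volumes.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a PersistentVolumeClaim manifest that requests
# the ReadWriteOncePod access mode. Claim name and size come from the caller.
render_rwop_pvc() {
  name="$1"
  size="$2"
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
spec:
  accessModes:
    - ReadWriteOncePod
  resources:
    requests:
      storage: ${size}
EOF
}
```

One way to use it would be `render_rwop_pvc data-claim 1Gi | kubectl apply -f -`. A second pod that tries to mount the same claim should then stay unschedulable until the first pod releases it.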

Deprecated API versions in Kubernetes 1.29 release:

1. Flow control resources

The flowcontrol.apiserver.k8s.io/v1beta2 API version of FlowSchema and PriorityLevelConfiguration is no longer served as of v1.29.

- Migrate manifests and API clients from flowcontrol.apiserver.k8s.io/v1beta2 to flowcontrol.apiserver.k8s.io/v1 (available since v1.29) or to flowcontrol.apiserver.k8s.io/v1beta3 (available since v1.26).

- Changes in flowcontrol.apiserver.k8s.io/v1:

The PriorityLevelConfiguration spec.limited.assuredConcurrencyShares field is renamed to spec.limited.nominalConcurrencyShares and defaults to 30 only when unspecified; an explicit value of 0 is not changed to 30.
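The migration described above mostly comes down to two textual changes in each manifest. The following is a minimal sketch, assuming the manifests are plain YAML files on disk; the function name `migrate_flowcontrol_manifest` is hypothetical. The target version defaults to v1, but v1beta3 can be passed for a cluster that is still on 1.28, where v1 is not yet served.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a copy of a manifest migrated away from
# flowcontrol.apiserver.k8s.io/v1beta2. The input file is not modified.
#   $1 = manifest path
#   $2 = target API version (default: v1; use v1beta3 on a 1.28 cluster)
migrate_flowcontrol_manifest() {
  file="$1"
  target="${2:-v1}"
  sed -e "s#flowcontrol\.apiserver\.k8s\.io/v1beta2#flowcontrol.apiserver.k8s.io/${target}#" \
      -e 's#assuredConcurrencyShares#nominalConcurrencyShares#' \
      "$file"
}
```

For example, `migrate_flowcontrol_manifest plc.yaml v1beta3 > plc-migrated.yaml` writes the migrated copy to a new file so the result can be diffed and reviewed before it is applied.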


Upgrade EKS with Terraform:

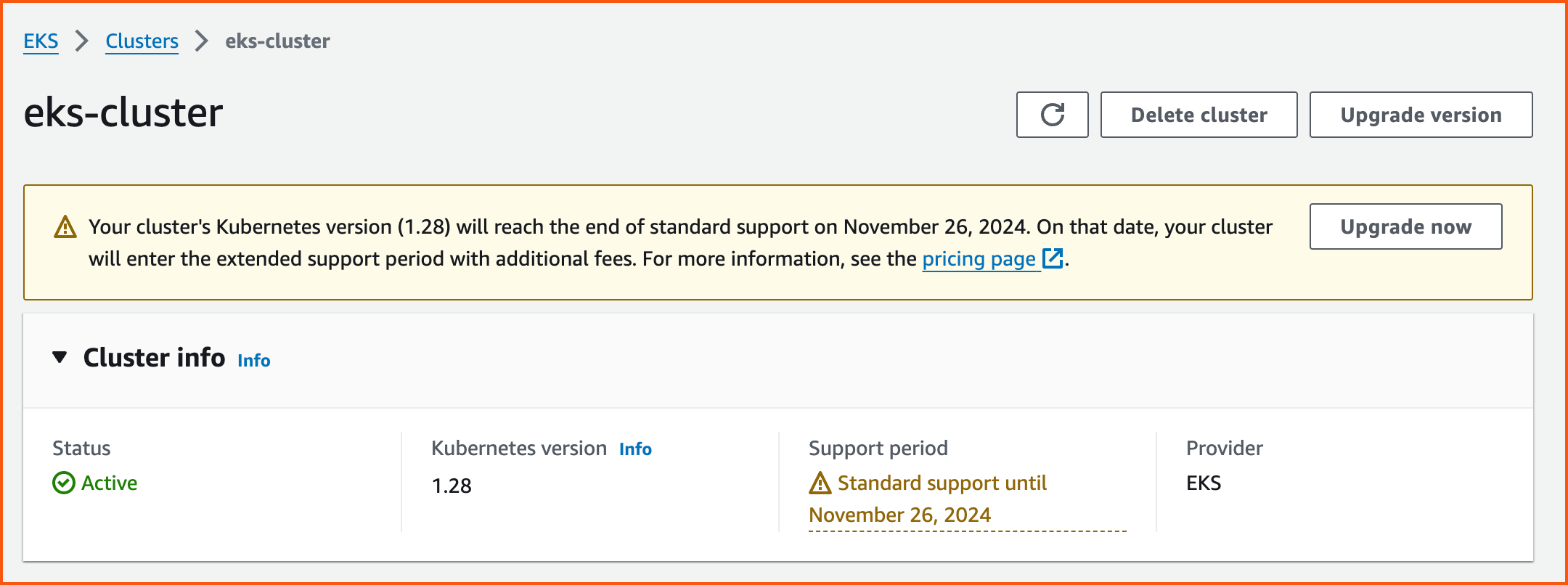

First, deploy the EKS cluster on AWS with version 1.28 using Terraform. Here are screenshots of the cluster:

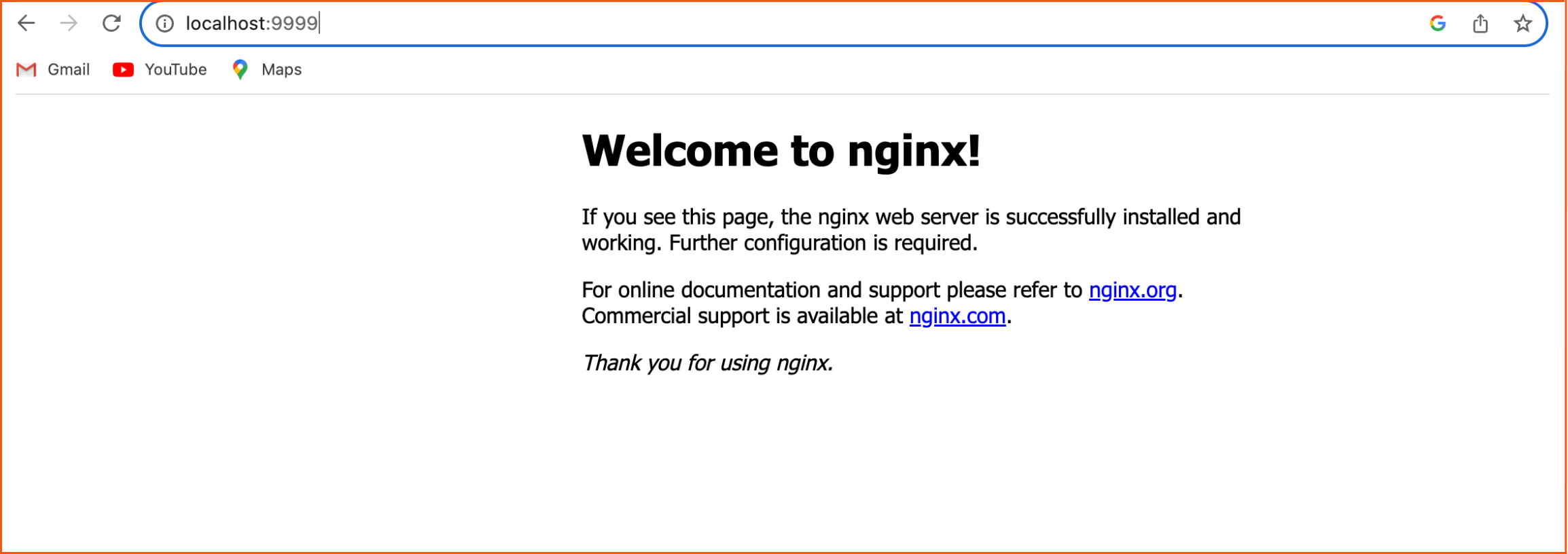

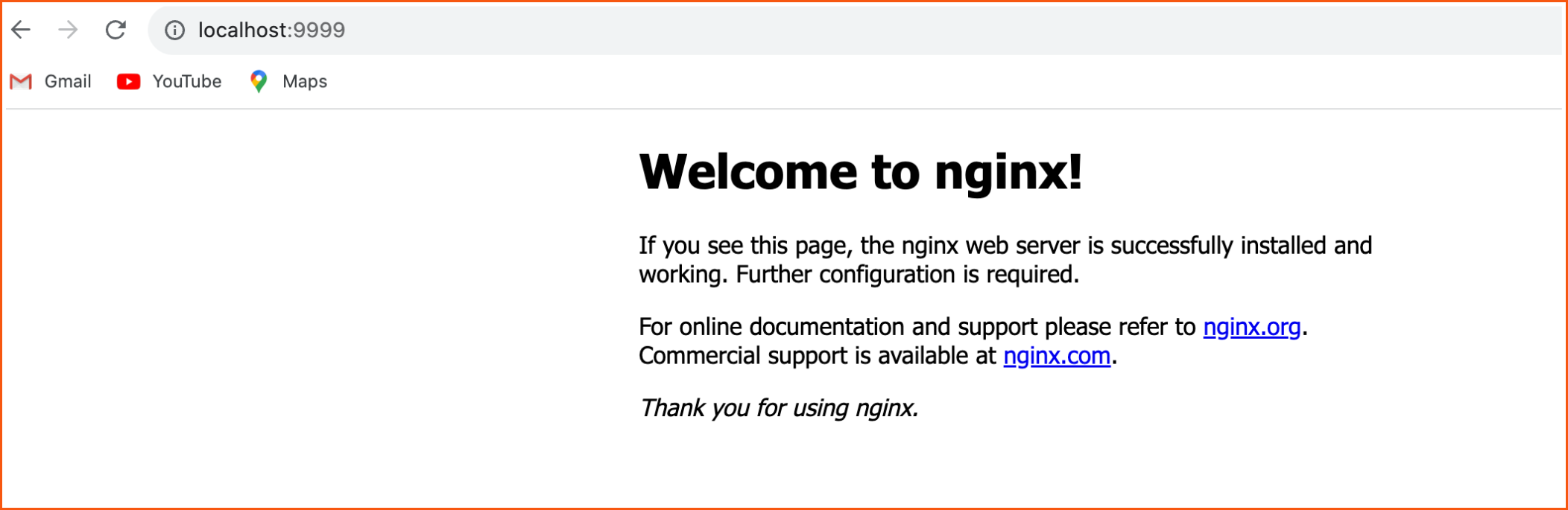

Next, deploy the nginx application in the cluster and verify it by port forwarding to local port 9999.

    $ kubectl get po -n nginx
    NAME                                READY   STATUS    RESTARTS   AGE
    nginx-deployment-848dd6cfb5-bbkfs   1/1     Running   0          16s

    $ kubectl port-forward -n nginx nginx-deployment-848dd6cfb5-bbkfs 9999:80
    Forwarding from 127.0.0.1:9999 -> 80
    Forwarding from [::1]:9999 -> 80
    Handling connection for 9999
    Handling connection for 9999

While the port forward is running, you can access the nginx application at http://localhost:9999
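The same check can be scripted so that it runs identically before and after the upgrade. This is a minimal sketch, not the exact procedure used above: it assumes the Deployment is named `nginx-deployment` (inferred from the pod name), forwards to the Deployment rather than to a specific pod so it keeps working after pods are replaced, and the function name `smoke_test_nginx` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: port-forward to the nginx Deployment, request the
# root page once, and succeed only if the HTTP status is 200.
smoke_test_nginx() {
  # Assumption: the Deployment is called nginx-deployment in namespace nginx.
  kubectl port-forward -n nginx deployment/nginx-deployment 9999:80 >/dev/null 2>&1 &
  pf_pid=$!
  sleep 2  # give the port forward a moment to start listening

  code=$(curl -s -o /dev/null -w '%{http_code}' http://localhost:9999)

  # Stop the background port forward before reporting the result.
  kill "$pf_pid" 2>/dev/null
  wait "$pf_pid" 2>/dev/null || true

  [ "$code" = "200" ]
}
```

Running `smoke_test_nginx && echo "nginx OK"` gives a quick pass/fail signal without opening a browser.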

Prerequisites to upgrade:

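Before upgrading to Kubernetes v1.29 in Amazon EKS, move any manifests and API clients that use FlowSchema or PriorityLevelConfiguration off flowcontrol.apiserver.k8s.io/v1beta2. On a 1.28 cluster, switch to flowcontrol.apiserver.k8s.io/v1beta3 first, because flowcontrol.apiserver.k8s.io/v1 is only served from v1.29 onward; after the upgrade you can move to v1.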
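To find what needs changing, it can help to scan the repository that holds your manifests. The sketch below assumes they are stored as files under one directory; the function name `find_v1beta2_manifests` is hypothetical. It only inspects files on disk, so API clients that build these objects in code need a separate check.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list every file under a directory (default: the
# current one) that still references the removed flowcontrol v1beta2 API.
find_v1beta2_manifests() {
  grep -rlF 'flowcontrol.apiserver.k8s.io/v1beta2' "${1:-.}"
}
```

An empty result, with a non-zero exit status from `grep`, means no file under that directory mentions the old API version.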

Upgrade EKS cluster:

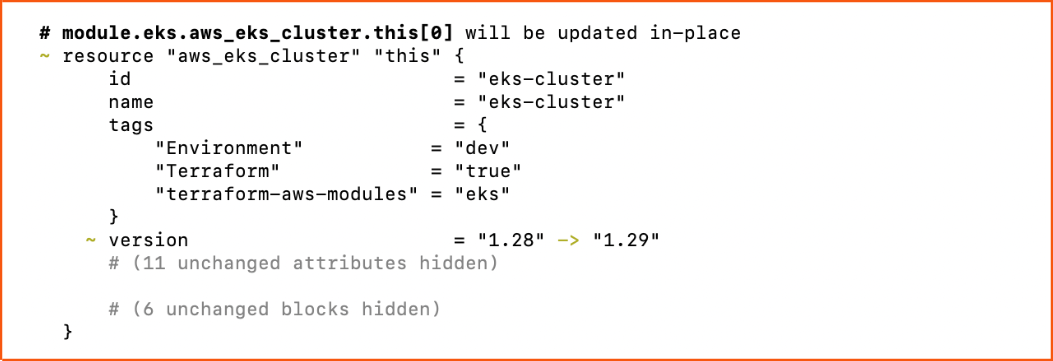

Update the Terraform script to upgrade the EKS cluster to 1.29 version.

    terraform {
      # Remote state stored in S3
      backend "s3" {
        encrypt = true
        bucket  = "terraform-state-bucket"
        key     = "infra"
        region  = "ap-south-1"
      }

      required_providers {
        aws = {
          source  = "hashicorp/aws"
          version = "~> 5.0"
        }
      }
    }

    provider "aws" {
      region = "ap-south-1"
    }

    module "eks" {
      source  = "terraform-aws-modules/eks/aws"
      version = "~> 20.0"

      cluster_name    = "eks-cluster"
      cluster_version = "1.29" # bumped from "1.28"

      cluster_endpoint_public_access = true

      # Add-on versions compatible with Kubernetes 1.29.
      # Version 20 of the module reads addon_version and resolve_conflicts_on_*;
      # plain "version" and "resolve_conflicts" keys are ignored.
      cluster_addons = {
        coredns = {
          addon_version               = "v1.11.1-eksbuild.4"
          resolve_conflicts_on_create = "OVERWRITE"
          resolve_conflicts_on_update = "OVERWRITE"
        }
        kube-proxy = {
          addon_version               = "v1.29.0-eksbuild.1"
          resolve_conflicts_on_create = "OVERWRITE"
          resolve_conflicts_on_update = "OVERWRITE"
        }
        vpc-cni = {
          addon_version               = "v1.16.0-eksbuild.1"
          resolve_conflicts_on_create = "OVERWRITE"
          resolve_conflicts_on_update = "OVERWRITE"
        }
        aws-ebs-csi-driver = {
          addon_version               = "v1.26.1-eksbuild.1"
          resolve_conflicts_on_create = "OVERWRITE"
          resolve_conflicts_on_update = "OVERWRITE"
        }
      }

      # Replace the placeholders with your VPC and subnet IDs
      vpc_id                   = "XXXXXXX"
      subnet_ids               = ["XXXXXXXXXXX", "XXXXXXXXXXX", "XXXXXXXXXXX"]
      control_plane_subnet_ids = ["XXXXXXXXXXX", "XXXXXXXXXXX", "XXXXXXXXXXX"]

      eks_managed_node_groups = {
        group = {
          ami_type       = "AL2023_x86_64_STANDARD"
          instance_types = ["t3a.medium"]

          min_size     = 1
          max_size     = 2
          desired_size = 1
        }
      }

      enable_cluster_creator_admin_permissions = true

      tags = {
        Environment = "dev"
        Terraform   = "true"
      }
    }
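The add-on versions pinned in the configuration have to be ones that EKS publishes for Kubernetes 1.29. One way to look them up is the `aws eks describe-addon-versions` command; the sketch below wraps it in a hypothetical function, `list_addon_versions`, and assumes the AWS CLI is installed and configured for the cluster's account and region.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the add-on versions EKS offers for a given
# add-on and Kubernetes version (default: 1.29).
list_addon_versions() {
  addon="$1"
  k8s_version="${2:-1.29}"
  aws eks describe-addon-versions \
    --addon-name "$addon" \
    --kubernetes-version "$k8s_version" \
    --query 'addons[].addonVersions[].addonVersion' \
    --output text
}
```

For instance, `list_addon_versions coredns` prints the CoreDNS builds available for 1.29, from which a value for the add-on's version setting can be chosen.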

To preview the changes after modifying the Terraform script, run terraform plan:

    terraform plan

To apply the changes and upgrade the EKS cluster to version 1.29, run terraform apply with auto-approve:

    terraform apply -auto-approve
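If you would rather apply exactly the plan you reviewed instead of letting `-auto-approve` compute a fresh one, Terraform can save the plan to a file and apply that file. This is a minimal sketch of that alternative; the function name `plan_then_apply` and the default file name `upgrade.tfplan` are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the plan to a file, then apply that saved plan.
# Applying a saved plan does not prompt, and it executes only what was planned.
plan_then_apply() {
  plan_file="${1:-upgrade.tfplan}"
  terraform plan -out="$plan_file" && terraform apply "$plan_file"
}
```

In practice you might run the two commands separately and inspect the saved plan with `terraform show upgrade.tfplan` in between.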

The control plane upgrade takes 8-10 minutes, and the node group and AWS EKS add-ons take 25-30 minutes. Once they finish, verify the nginx application that was already deployed by forwarding it to port 9999 again.

    $ kubectl get po -n nginx
    NAME                                READY   STATUS    RESTARTS   AGE
    nginx-deployment-848dd6cfb5-lznwd   1/1     Running   0          17m

    $ kubectl port-forward -n nginx nginx-deployment-848dd6cfb5-lznwd 9999:80
    Forwarding from 127.0.0.1:9999 -> 80
    Forwarding from [::1]:9999 -> 80
    Handling connection for 9999
    Handling connection for 9999

As the new pod name shows, the nginx pod was recreated when the node group was upgraded to version 1.29. The application is reachable again at http://localhost:9999
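Beyond the application check, it is worth confirming that every node is actually running a 1.29 kubelet. The sketch below is one way to do that: the hypothetical function `all_nodes_on_version` reads kubelet versions from standard input, one per line, and fails if any of them is not on the expected minor version.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read kubelet versions (one per line) on stdin and
# return non-zero if any is not on the expected minor version, e.g. 1.29.
all_nodes_on_version() {
  expected="$1"
  status=0
  while IFS= read -r version; do
    [ -z "$version" ] && continue
    case "$version" in
      "v${expected}."*) ;;  # e.g. v1.29.0-eks-... matches 1.29
      *)
        echo "node kubelet still on ${version}" >&2
        status=1
        ;;
    esac
  done
  return "$status"
}
```

The versions can be fed in with `kubectl get nodes -o jsonpath='{range .items[*]}{.status.nodeInfo.kubeletVersion}{"\n"}{end}' | all_nodes_on_version 1.29`.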

Conclusion

Upgrading our EKS cluster from 1.28 to 1.29 using Terraform proved to be remarkably efficient. The entire control plane upgrade was completed in just 8 minutes, significantly faster than previous upgrade experiences. The process was smooth, with no notable disruptions to our workloads. This successful upgrade highlights the streamlined approach and efficiency of using Terraform for EKS cluster management. The next blog will discuss the steps to upgrade your EKS cluster from 1.29 to 1.30 using Terraform. Stay tuned!