It's time to upgrade your EKS clusters! In my previous blog I walked through upgrading an EKS cluster from 1.29 to 1.30. This post continues with the highly anticipated Kubernetes 1.31 release, nicknamed Elli, which brings new features and improvements. If navigating the upgrade on your own sounds daunting, this blog will guide you through a smooth and efficient upgrade using Terraform so your workloads transition seamlessly into the Elli era.

What changed in the Kubernetes 1.31 release?

You can find the complete list of changes and updates in the official Kubernetes v1.31 release notes. These are the updates that caught my eye.

AppArmor support is now stable: Protect your containers with AppArmor by setting the appArmorProfile.type field in the container's securityContext. Before Kubernetes v1.30, AppArmor was controlled through annotations; starting in v1.30 it is controlled through fields. You should migrate away from the annotations and start using the appArmorProfile.type field.
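As a minimal sketch of the field-based syntax, the hypothetical helper below prints a Pod manifest that requests the container runtime's default AppArmor profile. It assumes your nodes run an OS with AppArmor enabled; the Pod name `apparmor-demo` and the `nginx:1.27` image are placeholders, not part of the original setup.

```shell
# Hypothetical helper: prints a Pod manifest that uses the field-based AppArmor
# API (securityContext.appArmorProfile) instead of the deprecated annotation.
apparmor_pod_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: apparmor-demo          # placeholder name
spec:
  containers:
    - name: app
      image: nginx:1.27        # placeholder image
      securityContext:
        appArmorProfile:
          # RuntimeDefault, Localhost (plus localhostProfile), or Unconfined
          type: RuntimeDefault
EOF
}

# Review the output, then apply it (requires cluster access):
#   apparmor_pod_manifest | kubectl apply -f -
```

The same appArmorProfile field can also be set in the Pod-level securityContext, in which case it applies to every container in the Pod.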

Improved ingress connectivity reliability for kube-proxy: A common problem with load balancers in Kubernetes is keeping the different components involved in sync so that traffic is not dropped. This feature adds a mechanism to kube-proxy that lets load balancers drain connections from terminating Nodes exposed by Services with type: LoadBalancer and externalTrafficPolicy: Cluster. It also establishes best practices for cloud providers and Kubernetes load balancer implementations.
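For reference, here is a sketch of the kind of Service this behavior targets. The Service name `nginx-lb` and the `app: nginx` selector are assumptions; match them to the labels on your own Deployment. The helper only prints the manifest.

```shell
# Hypothetical helper: prints a Service that matches the case the kube-proxy
# change targets: type LoadBalancer with externalTrafficPolicy Cluster.
nginx_lb_service_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: nginx-lb                   # assumed name
  namespace: nginx
spec:
  type: LoadBalancer
  externalTrafficPolicy: Cluster   # traffic may enter via any node, then be forwarded
  selector:
    app: nginx                     # assumed Pod label
  ports:
    - port: 80
      targetPort: 80
EOF
}

# Apply it (requires cluster access):
#   nginx_lb_service_manifest | kubectl apply -f -
```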

PersistentVolume last phase transition time: This feature adds a PersistentVolumeStatus field that holds a timestamp of when a PersistentVolume last transitioned to a different phase. With this feature enabled, every PersistentVolume object has a new field, .status.lastTransitionTime, holding the timestamp of the volume's last phase transition. The change is not immediate: the field is populated the first time a PersistentVolume is updated and transitions between phases (Pending, Bound, or Released) after upgrading to Kubernetes v1.31. This lets you measure how long a PersistentVolume takes to move from Pending to Bound, which is also useful for metrics and SLOs.
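To see the new field after the upgrade, a custom-columns query is enough. The helper name `pv_transition_times` is hypothetical; volumes that have not changed phase since the upgrade should show `<none>` in the last column.

```shell
# Hypothetical helper: lists each PersistentVolume with its current phase and
# the new .status.lastTransitionTime timestamp.
pv_transition_times() {
  kubectl get pv \
    -o custom-columns='NAME:.metadata.name,PHASE:.status.phase,LAST_TRANSITION:.status.lastTransitionTime'
}

# Usage (requires cluster access):
#   pv_transition_times
```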

This release includes a total of 11 enhancements promoted to Stable:

- PersistentVolume last phase transition time

- Metric cardinality enforcement

- Kube-proxy improved ingress connectivity reliability

- Add CDI devices to device plugin API

- Move cgroup v1 support into maintenance mode

- AppArmor support

- PodHealthyPolicy for PodDisruptionBudget

- Retriable and non-retriable Pod failures for Jobs

- Elastic Indexed Jobs

- Allow StatefulSet to control start replica ordinal numbering

- Random Pod selection on ReplicaSet downscaling

Deprecations and removals in the Kubernetes 1.31 release:

- Deprecation of status.nodeInfo.kubeProxyVersion field for Nodes: The .status.nodeInfo.kubeProxyVersion field of Nodes has been deprecated in Kubernetes v1.31, and will be removed in a later release. It's being deprecated because the value of this field wasn't (and isn't) accurate. This field is set by the kubelet, which does not have reliable information about the kube-proxy version or whether kube-proxy is running.

The DisableNodeKubeProxyVersion feature gate is set to true by default in v1.31, so the kubelet no longer attempts to set the .status.nodeInfo.kubeProxyVersion field for its associated Node.

- Removal of the kubelet --keep-terminated-pod-volumes command-line flag: The kubelet flag --keep-terminated-pod-volumes, which was deprecated in 2017, has been removed as part of the v1.31 release.
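To find tooling that still relies on the deprecated Node field, you can compare what the Nodes report with the version of the kube-proxy EKS add-on, which is the reliable source on EKS. This is a sketch: the helper names are hypothetical, and the default cluster name `eks-cluster` is taken from the Terraform script later in this post.

```shell
# Hypothetical helper: shows the deprecated Node field next to the kubelet version.
# On nodes where the kubelet no longer sets it, the column shows <none>.
node_proxy_versions() {
  kubectl get nodes \
    -o custom-columns='NAME:.metadata.name,KUBELET:.status.nodeInfo.kubeletVersion,KUBE_PROXY_FIELD:.status.nodeInfo.kubeProxyVersion'
}

# Hypothetical helper: reads the kube-proxy version from the EKS add-on instead.
kube_proxy_addon_version() {
  local cluster="${1:-eks-cluster}"
  aws eks describe-addon \
    --cluster-name "$cluster" \
    --addon-name kube-proxy \
    --query 'addon.addonVersion' \
    --output text
}
```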

Steps to upgrade EKS from 1.30 to 1.31

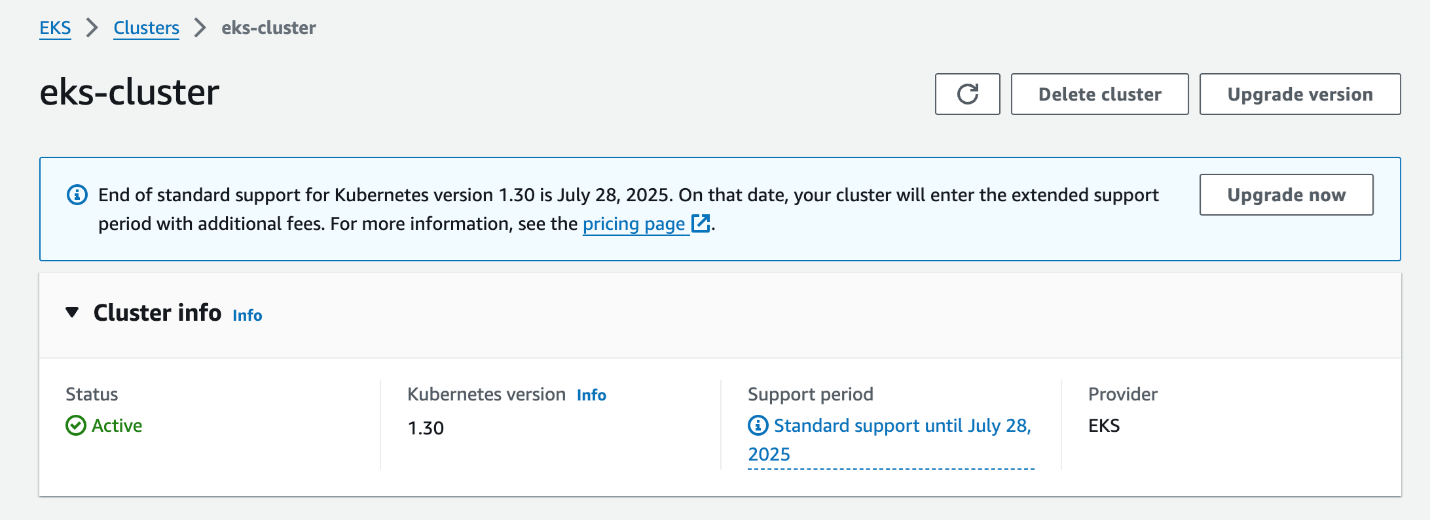

First, I deployed an EKS cluster running version 1.30 in AWS using Terraform. Here's a screenshot of the cluster.

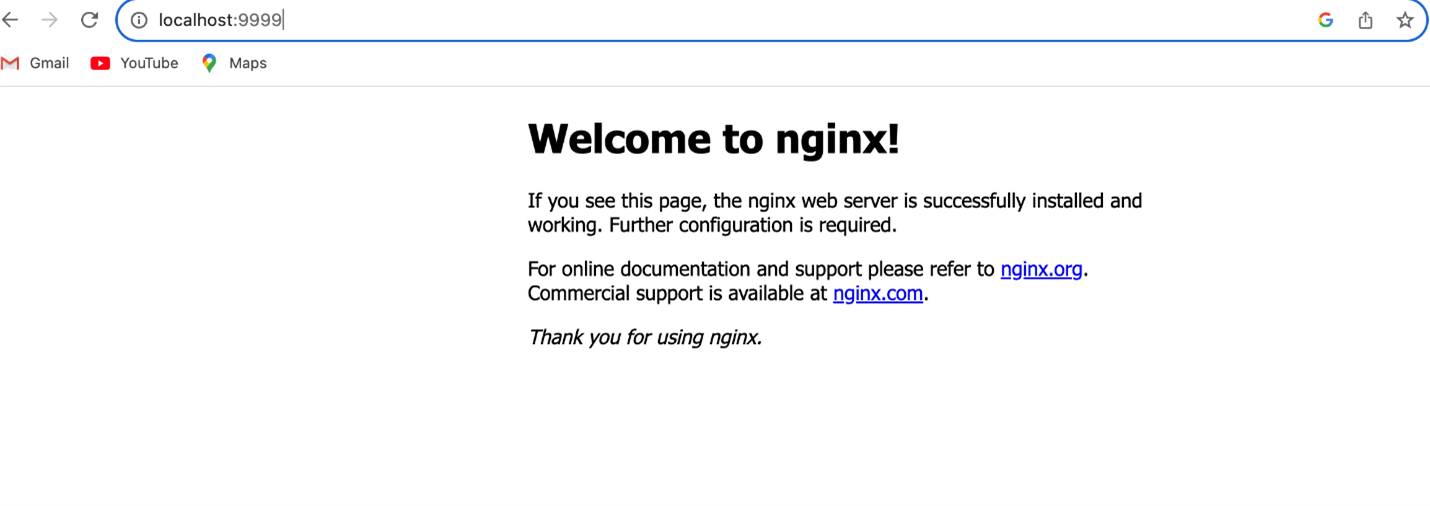

I then deployed an NGINX application to the cluster and verified it by port-forwarding local port 9999 to the pod:

```console
$ kubectl get po -n nginx
NAME                                READY   STATUS    RESTARTS   AGE
nginx-deployment-595dff4fdb-dbqxm   1/1     Running   0          13s

$ kubectl port-forward -n nginx nginx-deployment-595dff4fdb-dbqxm 9999:80
Forwarding from 127.0.0.1:9999 -> 80
Forwarding from [::1]:9999 -> 80
Handling connection for 9999
Handling connection for 9999
```

The NGINX application is then reachable at http://localhost:9999.
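To repeat this check before and after the upgrade without copying pod names (the pod name changes once the node group is replaced), a small smoke test can port-forward to the Deployment instead. This is a sketch that assumes the Deployment is named `nginx-deployment` (inferred from the pod name above) and that `curl` is installed; the helper name is hypothetical.

```shell
# Hypothetical smoke test: port-forwards the nginx Deployment to localhost,
# prints the HTTP status code of one request, then stops the port-forward.
# Returns 0 only if the status code is 200.
nginx_smoke_test() {
  local port="${1:-9999}"
  kubectl port-forward -n nginx deployment/nginx-deployment "${port}:80" >/dev/null 2>&1 &
  local pf_pid=$!
  sleep 3   # give the tunnel a moment to start listening
  local code
  code=$(curl -s -o /dev/null -w '%{http_code}' "http://localhost:${port}")
  kill "$pf_pid" 2>/dev/null || true   # the port-forward may already have exited
  echo "$code"
  [ "$code" = "200" ]
}

# Usage (requires cluster access):
#   nginx_smoke_test 9999
```

Targeting `deployment/nginx-deployment` lets kubectl pick a current pod for you, so the same command works unchanged after the nodes are replaced.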

Prerequisites for upgrading from 1.30 to 1.31

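Remove the kubelet flag --keep-terminated-pod-volumes from your node configuration (for example, custom kubelet arguments in launch template user data) before upgrading, because the flag no longer exists in the v1.31 kubelet.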
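One way to check a node is to inspect the running kubelet's command line. The sketch below assumes shell access to the node (for example through SSM Session Manager) and that `pgrep` from procps is available; the helper name is hypothetical. If the flag comes from user data or another bootstrap script, remove it there so replacement nodes do not start with it.

```shell
# Hypothetical helper: succeeds (returns 0) if the given kubelet command line
# still contains the --keep-terminated-pod-volumes flag removed in v1.31.
has_removed_kubelet_flag() {
  case " $1 " in
    *" --keep-terminated-pod-volumes"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage on a node (requires shell access to the node):
#   if has_removed_kubelet_flag "$(pgrep -a kubelet)"; then
#     echo "Remove --keep-terminated-pod-volumes before upgrading"
#   fi
```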

Upgrade the EKS cluster:

Update the Terraform script to set cluster_version to 1.31 and pin add-on versions that are compatible with 1.31:

```hcl
terraform {
  # Remote state stored in S3
  backend "s3" {
    encrypt = true
    bucket  = "terraform-state-bucket"
    key     = "infra"
    region  = "ap-south-1"
  }

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0"
    }
  }
}

provider "aws" {
  region = "ap-south-1"
}

module "eks" {
  source  = "terraform-aws-modules/eks/aws"
  version = "~> 20.0"

  cluster_name    = "eks-cluster"
  cluster_version = "1.31" # was "1.30"; changing this triggers the control-plane upgrade

  cluster_endpoint_public_access = true

  # Managed add-ons pinned to 1.31-compatible versions. Module v20 reads
  # addon_version (not version) and resolve_conflicts_on_update (not resolve_conflicts).
  cluster_addons = {
    coredns = {
      addon_version               = "v1.11.3-eksbuild.1"
      resolve_conflicts_on_update = "OVERWRITE"
    }
    kube-proxy = {
      addon_version               = "v1.31.0-eksbuild.5"
      resolve_conflicts_on_update = "OVERWRITE"
    }
    vpc-cni = {
      addon_version               = "v1.18.5-eksbuild.1"
      resolve_conflicts_on_update = "OVERWRITE"
    }
    aws-ebs-csi-driver = {
      addon_version               = "v1.35.0-eksbuild.1"
      resolve_conflicts_on_update = "OVERWRITE"
    }
  }

  vpc_id                   = "*******"
  subnet_ids               = ["*******", "*******", "*******"]
  control_plane_subnet_ids = ["*******", "*******", "*******"]

  # By default the managed node group follows cluster_version, so it is rolled to 1.31 too
  eks_managed_node_groups = {
    group = {
      ami_type       = "AL2023_x86_64_STANDARD"
      instance_types = ["t3a.medium"]
      min_size       = 1
      max_size       = 2
      desired_size   = 1
    }
  }

  enable_cluster_creator_admin_permissions = true

  tags = {
    Environment = "dev"
    Terraform   = "true"
  }
}
```
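Before applying, it's worth confirming that each pinned add-on version is actually published for 1.31. A sketch using the AWS CLI, assuming credentials for the account are configured and the default region is `ap-south-1`; the helper name is hypothetical.

```shell
# Hypothetical helper: lists the add-on versions EKS publishes for a given
# Kubernetes version, to compare against the addon_version values in Terraform.
addon_versions() {
  local k8s_version="$1" addon="$2"
  aws eks describe-addon-versions \
    --kubernetes-version "$k8s_version" \
    --addon-name "$addon" \
    --query 'addons[].addonVersions[].addonVersion' \
    --output text
}

# Usage (requires AWS credentials):
#   for a in coredns kube-proxy vpc-cni aws-ebs-csi-driver; do
#     echo "$a: $(addon_versions 1.31 "$a")"
#   done
```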

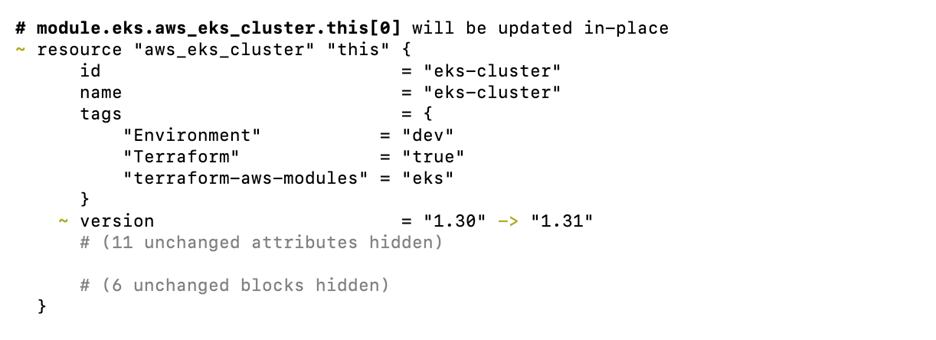

After modifying the Terraform script, run terraform plan to review the changes:

```shell
terraform plan
```

To apply the changes and upgrade the EKS cluster to 1.31, run terraform apply:

```shell
terraform apply
```
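A variation worth considering is to save the plan to a file and apply exactly that file, so what gets applied is what you reviewed. This sketch wraps both steps in a hypothetical helper with a confirmation prompt; the plan file name `eks-1-31.tfplan` is arbitrary.

```shell
# Hypothetical helper: writes the plan to a file, asks for confirmation, and
# applies that saved plan (terraform apply does not prompt again for a plan file).
plan_and_apply() {
  local plan_file="eks-1-31.tfplan"
  local answer
  terraform plan -out="$plan_file" || return 1
  read -r -p "Apply ${plan_file}? [y/N] " answer
  [ "$answer" = "y" ] || return 1
  terraform apply "$plan_file"
}
```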

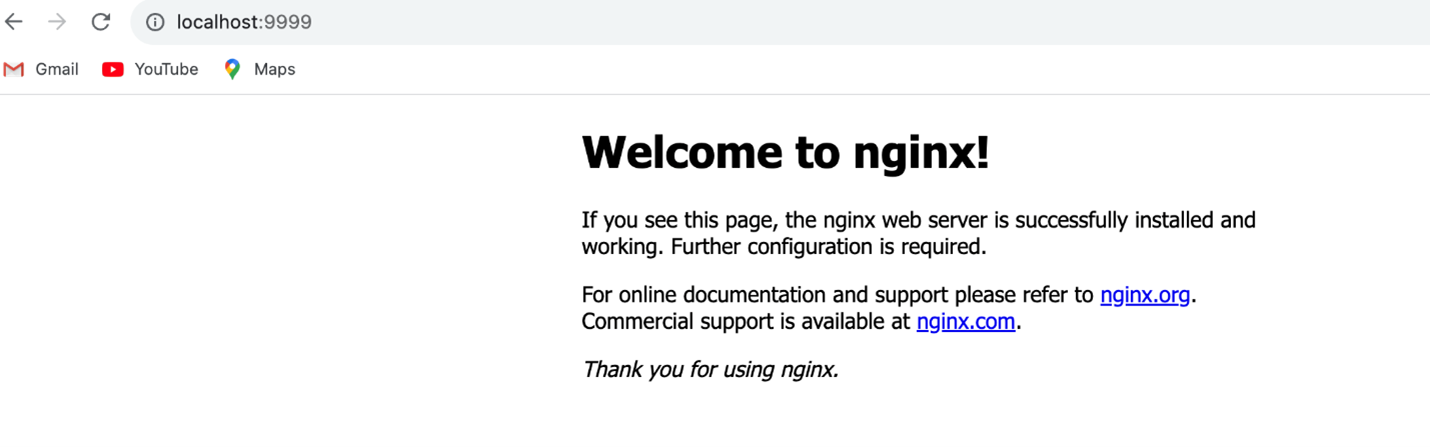

In my run, the control-plane upgrade took about 8-10 minutes, and the node group and EKS add-ons took another 25-30 minutes. Next, let's verify the NGINX application that was deployed before the upgrade. I checked it again by port-forwarding local port 9999 to the new pod:

```console
$ kubectl get po -n nginx
NAME                                READY   STATUS    RESTARTS   AGE
nginx-deployment-595dff4fdb-rnccq   1/1     Running   0          9m9s

$ kubectl port-forward -n nginx nginx-deployment-595dff4fdb-rnccq 9999:80
Forwarding from 127.0.0.1:9999 -> 80
Forwarding from [::1]:9999 -> 80
Handling connection for 9999
Handling connection for 9999
```

The new pod name and its age show that the NGINX pod was recreated when the node group was upgraded to 1.31. The application is again reachable at http://localhost:9999.
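Beyond the application check, you can confirm that both the control plane and the nodes report 1.31. A sketch, assuming the cluster name `eks-cluster` from the Terraform script and configured AWS credentials; the helper name is hypothetical.

```shell
# Hypothetical helper: prints the control-plane version reported by EKS and the
# kubelet version of every node, which should all be 1.31 after the upgrade.
verify_versions() {
  local cluster="${1:-eks-cluster}"
  echo "control plane: $(aws eks describe-cluster --name "$cluster" --query 'cluster.version' --output text)"
  kubectl get nodes \
    -o custom-columns='NAME:.metadata.name,KUBELET:.status.nodeInfo.kubeletVersion'
}

# Usage (requires AWS credentials and cluster access):
#   verify_versions eks-cluster
```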

After upgrading the EKS cluster, also verify the other components running in it. Depending on your setup, these could include:

- Load Balancer Controller

- calico-node

- Cluster Autoscaler or Karpenter

- External Secrets Operator

- Kube State Metrics

- Metrics Server

- csi-secrets-store

- KEDA (event-driven autoscaler)
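A quick first pass over these components is to look for pods that are not healthy and for Deployments or DaemonSets (such as calico-node) whose ready count lags the desired count. This is a sketch with a hypothetical helper name. Note that a pod in CrashLoopBackOff still has phase Running, so the field selector alone will not catch it; check the READY and RESTARTS columns of `kubectl get pods -A` as well.

```shell
# Hypothetical helper: a first-pass health check after the upgrade.
post_upgrade_health() {
  echo "== Pods not in phase Running or Succeeded =="
  kubectl get pods -A --field-selector='status.phase!=Running,status.phase!=Succeeded'
  echo "== Deployments (compare READY with the desired count) =="
  kubectl get deployments -A
  echo "== DaemonSets, e.g. calico-node (compare READY with DESIRED) =="
  kubectl get daemonsets -A
}
```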

Conclusion

Upgrading an EKS cluster is now much quicker: the control plane took only about 8 minutes in my run. Using Terraform, I upgraded both the EKS cluster and the node group to version 1.31, which made the process significantly easier. Fortunately, there were no major issues during the upgrade, and all workloads kept running without problems.

My next blog will cover upgrading EKS from 1.31 to 1.32. Stay tuned!