Docker is one of the most popular technologies among people working with containers. And why not, considering the advantages it offers in portability, isolation, standardization, and choice of programming frameworks? These features make Docker a great tool for developers to experiment with and for helping clients. In this blog, I will talk about how we at Opcito help our clients set up a development environment using Docker and Docker Compose.

In this particular case, the client's application has more than 20 microservices that continuously analyze real-time and historical data. The ever-growing data and analytical operations created some challenges for the development and operations teams. It was difficult for a new developer to start any microservice because of the configuration changes needed on their local setup before they could work on a service. Interdependency between multiple microservices and programs made it difficult to test every configuration, which raised questions about overall application stability. The same interdependence also caused problems whenever the customer wanted to run multiple services on a local system simultaneously.

So, how do you solve this puzzle with Docker?

As a developer, you are always looking to minimize the differences between your development and production environments. Docker helps you set up a local environment that matches production without much hassle. Because containers are a lightweight form of virtualization, you can have a container with everything up and running within a few minutes. Containers run in an isolated environment and within their own network, without depending on services installed on the host. You don’t need to install any other service or dependency for your environment on your machine; all you need is Docker. For example, if you want to run a Ruby script but don’t have Ruby installed, no worries: pull a Ruby Docker image and start a container from it. Development is straightforward with Docker: build the code into an image, push the image to the registry, and pull the same image on the server in the production environment.
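The Ruby example above can be sketched as a small shell helper. This is only an illustration: the `run_ruby` name, the `/usr/src/app` mount point, and the script name are my assumptions, and the `ruby:2.3` tag is simply the one used in the Dockerfile sample in this post.

```shell
# Run a Ruby script in a throwaway container; Ruby is not needed on the host.
# Assumptions: ruby:2.3 is the image tag, and /usr/src/app is an arbitrary
# mount point chosen for this sketch.
run_ruby() {
  script="$1"
  # --rm removes the container on exit; -v mounts the current directory;
  # -w makes the mounted directory the working directory.
  docker run --rm \
    -v "$PWD":/usr/src/app \
    -w /usr/src/app \
    ruby:2.3 ruby "$script"
}
```

With a file named `hello.rb` in the current directory, `run_ruby hello.rb` would pull the image on first use and run the script inside the container.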

How to use Docker for a development environment?

Development with Docker is pretty much the same as development in any other environment. You make sure you have a working directory in which your code and its dependencies reside, and you run the commands that start your code inside the container. The only change in this setup is that, instead of the developer cloning each and every repo for every microservice, the repo is cloned inside the image when it is built. The developer then has only one responsibility: pull the Docker image. The code from the container is copied directly to volumes on the host machine when the containers are up. This removes the effort of cloning every repo up front just to build a Docker image. So whenever a new developer wants to work on any microservice, they just need to pull the latest Docker image from AWS ECR and start the container. The developer can easily switch to any other git branch, or create their own branch, and pull/push the code. This makes the developer’s work easy because nothing needs to be set up on the host machine; everything is inside the container. The developer just needs to update the code and test it inside the container.
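For a new developer, the workflow described above might look roughly like the sketch below. The registry address, account ID, region, and function names are hypothetical placeholders, and the login step assumes a recent AWS CLI that provides `aws ecr get-login-password`. The image is re-tagged as `backend:latest` only so that it matches the image name used in the compose sample in this post.

```shell
# Hypothetical values: replace with your own AWS account ID and region.
ECR_REGISTRY="${ECR_REGISTRY:-123456789012.dkr.ecr.us-east-1.amazonaws.com}"
AWS_REGION="${AWS_REGION:-us-east-1}"

start_dev_env() {
  # Authenticate the local Docker client against ECR.
  aws ecr get-login-password --region "$AWS_REGION" \
    | docker login --username AWS --password-stdin "$ECR_REGISTRY" || return 1
  # Pull the latest image and tag it with the name the compose file expects.
  docker pull "$ECR_REGISTRY/backend:latest" || return 1
  docker tag "$ECR_REGISTRY/backend:latest" backend:latest || return 1
  # Start the services in the background.
  docker-compose up -d
}

# Open a shell inside the running container ("backend" is the container_name
# from the compose sample) to switch branches, edit, and test.
dev_shell() {
  docker exec -it backend bash
}
```

After `start_dev_env`, running `dev_shell` drops you into the container, where the usual git commands such as `git checkout -b my-feature` work against the cloned repo.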

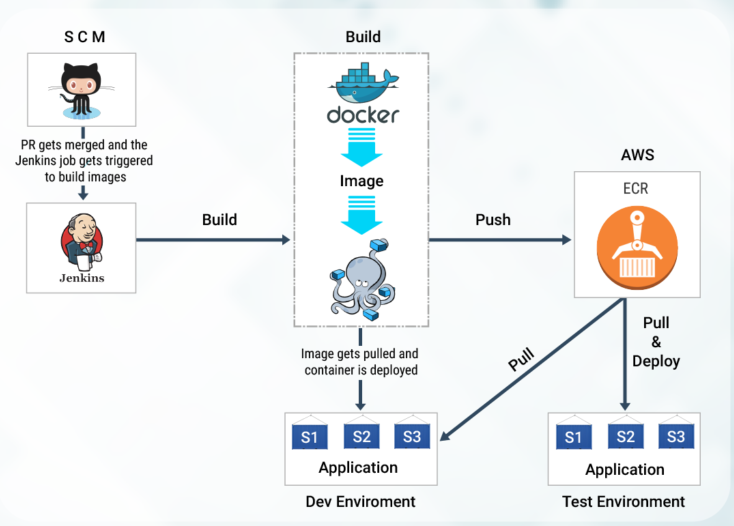

I have created a continuous integration (CI) pipeline to build Docker images from the master branch. Whenever code changes on master, a Jenkins job is triggered; it builds a new image and pushes it to the AWS ECR registry. Developers can pull the latest image anytime they want.
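The shell step of such a Jenkins job could look something like this. It is a sketch, not the actual job configuration: the registry address, region, image name, and the `build_and_push` function name are all assumptions made for illustration.

```shell
# Hypothetical values: replace with your own AWS account ID and region.
ECR_REGISTRY="${ECR_REGISTRY:-123456789012.dkr.ecr.us-east-1.amazonaws.com}"
AWS_REGION="${AWS_REGION:-us-east-1}"

build_and_push() {
  image="$ECR_REGISTRY/backend:latest"
  # Build the image from the Dockerfile in the current directory.
  docker build -t "$image" . || return 1
  # Authenticate against ECR, then push the freshly built image.
  aws ecr get-login-password --region "$AWS_REGION" \
    | docker login --username AWS --password-stdin "$ECR_REGISTRY" || return 1
  docker push "$image"
}
```

Calling `build_and_push` from the job's workspace after a checkout of master would publish the image that developers later pull.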

Here is a sample Dockerfile that clones the code inside the image:

    FROM ruby:2.3
    RUN mkdir /root/.ssh/
    ARG INSTALL_DIR=/home
    ARG API_PORT=3000
    WORKDIR $INSTALL_DIR
    ADD src/key /root/.ssh/id_rsa
    RUN chmod 700 /root/.ssh/id_rsa
    RUN touch /root/.ssh/known_hosts
    RUN ssh-keyscan github.com >> /root/.ssh/known_hosts
    RUN git clone git@github.com:xyz.git
    # run bundle install
    RUN bundle install
    EXPOSE $API_PORT
    CMD ["rails", "server", "-b", "0.0.0.0"]

Sample docker-compose file:

    version: '2'
    services:
      backend:
        image: backend:latest
        container_name: backend
        env_file:
          - conf.env
        links:
          - redis
          - elasticsearch
          - logstash
          - kibana
        volumes:
          - "/home/api:/home/api"
        ports:
          - "3000:3000"
        restart: always

The code will be copied to /home/api from the container when the container is up.
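To check what is actually mounted once the container is up, one option is to inspect the container. This is a minimal sketch, assuming the container is named `backend` as in the compose sample; the `show_backend_mounts` helper name is made up for illustration.

```shell
# Print the mount configuration of the running "backend" container as JSON.
show_backend_mounts() {
  docker inspect --format '{{ json .Mounts }}' backend
}
```

With the volumes entry shown above, the output should list `/home/api` as both the source on the host and the destination in the container.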

Now, as a result, you can:

- Build the dev environment with just a single command, “docker-compose up”

- Test at ease in the development environment

- Get started much faster than before because of the use of Docker containers instead of virtual machines

- Use Docker images instead of setting up a completely new environment for faster deployment

- Manage and scale containers easily

- Resolve the compatibility and maintainability issues with more reliability

- Control and manage the traffic flow in addition to maintaining and isolating the containers from each other for increased security

- Provide consistency across all environments

So this was all about setting up Dockerized environments for developers, where you can easily facilitate communication between containers. Docker makes setting up an environment as easy as building the code, which simplifies the overall process. It allows you to run many applications on the same hardware. It helps developers deploy their code to a ready-made, customized environment and allows multiple instances to be scaled up and down.