Data ingestion with Hadoop Yarn, Spark, and Kafka

Posted By

Aashish Chetwani

As technology evolves and introduces newer and better solutions to ease our day-to-day hustle, these solutions generate a huge amount of data from different sources such as sensors, logs, and databases. The amount of data being generated is only going one way, and that is up, which means a growing requirement for storing it. To meet this requirement, there are storage systems that can store this data not only efficiently but also more cheaply than ever. That covers the data being generated and how you can store it, but the question is: are you doing enough to analyze it in a way that will profit your business? If the answer is ‘Yes’, then this blog is for you, and if the answer is ‘No’, then this blog is definitely for you. There are different technologies available that can elevate the value of the generated data, and one of them is “data ingestion with big data”.

So, what is data ingestion?

Data ingestion is the process of collecting data from various sources, often in unstructured form, and moving it to a store where it can be analyzed. The data can be ingested in real time or in batches. Real-time data is ingested as soon as it arrives, while batch data is ingested in chunks at periodic intervals. To make this ingestion process work smoothly, we can use different tools at different layers to build the data pipeline. So, what are these different layers, and which tools can we use?

| Data ingestion process layers | Tools used |
| --- | --- |
| Data Collection Layer | Apache Kafka, AWS Kinesis |
| Data Processing Layer | Apache Spark (Hadoop Yarn), Apache Samza |
| Data Storage Layer | HBase, Hive |
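Before going through the layers, here is a minimal sketch of the difference between real-time and batch ingestion described above. It uses only the Python standard library; the function names and the sample events are hypothetical, and the batches are cut by record count rather than by a time interval to keep the example short.

```python
from itertools import islice
from typing import Callable, Iterable, List


def ingest_realtime(records: Iterable[str],
                    sink: Callable[[List[str]], None]) -> None:
    """Hand each record to the sink as soon as it arrives."""
    for record in records:
        sink([record])


def ingest_batches(records: Iterable[str], batch_size: int,
                   sink: Callable[[List[str]], None]) -> None:
    """Collect records into fixed-size chunks and hand each chunk to the sink."""
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            break
        sink(batch)


# Hypothetical sample events
events = ["e1", "e2", "e3", "e4", "e5"]

realtime_writes: List[List[str]] = []
ingest_realtime(events, realtime_writes.append)

batch_writes: List[List[str]] = []
ingest_batches(events, 2, batch_writes.append)

print(len(realtime_writes))  # 5 writes, one per record
print(batch_writes)          # [['e1', 'e2'], ['e3', 'e4'], ['e5']]
```

The real-time path makes one write per record, while the batch path makes fewer, larger writes. That is the usual trade-off between latency and per-write overhead.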

Let’s see what these layers actually stand for. I will explain the data ingestion process through one scenario that uses some of the above-mentioned tools for the different layers:

Data collection layer: The data collection layer decides how the data is collected from its sources to build the data pipeline. In the scenario I will be elaborating, I am using Kafka for data collection, with the data arriving in the form of messages. Apache Kafka is a distributed publish-subscribe messaging system that provides a robust queue capable of handling high volumes of data between producers and consumers. It is used for building real-time applications.

Use cases for Kafka:

1. Messaging

2. Stream Processing

3. Log Aggregation Solution
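To make the publish-subscribe idea concrete, here is a toy in-memory model written with the Python standard library. It is not Kafka's API: the `ToyBroker` class, the topic name, and the group names are all hypothetical. It only illustrates that messages are appended to a topic log and that each consumer group reads the log independently by tracking its own offset.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


class ToyBroker:
    """In-memory stand-in for a publish-subscribe log (not Kafka's API)."""

    def __init__(self) -> None:
        self._topics: Dict[str, List[str]] = defaultdict(list)
        # Next offset to read, tracked per (consumer group, topic).
        self._offsets: Dict[Tuple[str, str], int] = defaultdict(int)

    def publish(self, topic: str, message: str) -> int:
        """Append a message to the topic log and return its offset."""
        self._topics[topic].append(message)
        return len(self._topics[topic]) - 1

    def poll(self, group: str, topic: str, max_messages: int = 10) -> List[str]:
        """Return unread messages for this group and advance its offset."""
        start = self._offsets[(group, topic)]
        batch = self._topics[topic][start:start + max_messages]
        self._offsets[(group, topic)] = start + len(batch)
        return batch


broker = ToyBroker()
for line in ["login user=1", "click user=1", "logout user=1"]:
    broker.publish("app-logs", line)

# Two independent consumer groups read the same log at their own pace.
analytics_first = broker.poll("analytics", "app-logs", max_messages=2)
analytics_rest = broker.poll("analytics", "app-logs")
archiver_all = broker.poll("archiver", "app-logs")
```

Because the log is kept and each group has its own offset, the same messages can feed messaging, stream processing, and log aggregation consumers at once.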

Data processing layer: In this layer, the main focus is on how the data gets processed, which helps to build a complete data pipeline. In this case, I am using Apache Spark, an in-memory distributed data processing engine. Application performance depends heavily on the resources allocated, such as executors, cores, and memory. The resources an application needs depend on its characteristics, such as how storage-heavy or computation-heavy it is. Spark can run under several cluster managers; the two covered here are Standalone and YARN.

- Spark in Standalone mode - All resource management and job scheduling are taken care of by Spark itself.

- Spark on YARN - YARN is a cluster management technology, and Spark can run on YARN in the same way as it runs on Mesos. YARN is the resource manager introduced with MRv2 (Hadoop 2), and combining it with Spark gives users richer resource scheduling capabilities.
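As an illustration of how executors, cores, and memory are requested when Spark runs on YARN, the sketch below assembles a `spark-submit` command line in Python. The flags are standard `spark-submit` options, but the script name `ingest_job.py` and the sizing numbers are assumptions chosen for the example, not the values used in the project described here.

```python
from typing import List


def build_spark_submit(app_path: str, num_executors: int,
                       executor_cores: int, executor_memory_gb: int) -> List[str]:
    """Build a spark-submit command line for running an application on YARN."""
    return [
        "spark-submit",
        "--master", "yarn",            # let YARN manage cluster resources
        "--deploy-mode", "cluster",    # run the driver inside the cluster
        "--num-executors", str(num_executors),
        "--executor-cores", str(executor_cores),
        "--executor-memory", f"{executor_memory_gb}g",
        app_path,
    ]


# Hypothetical job and sizing
command = build_spark_submit("ingest_job.py", num_executors=4,
                             executor_cores=2, executor_memory_gb=4)

# Rough totals requested from YARN (excluding per-executor memory overhead).
total_cores = 4 * 2
total_executor_memory_gb = 4 * 4

print(" ".join(command))
```

Building the command as a list keeps the arguments easy to pass to `subprocess.run` without shell quoting issues, should you want to launch the job from Python.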

Data storage layer: In this layer, the primary focus is on how to store the data. This layer is mainly used to store real-time data, which usually arrives in large volumes and has already been processed by the data processing layer. In this case, I am using HBase, an open source framework from Apache. HBase is a NoSQL database that runs on top of HDFS (Hadoop Distributed File System) and keeps rows sorted by row key, which means less time spent looking up particular data. This speeds up reading and writing, and its multi-dimensional, distributed, and scalable nature makes it easy to integrate within Hadoop.
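The reason row-key lookups are quick can be shown with a toy model of a sorted, sparse table. This is a standard-library sketch, not the HBase client API; the `ToySparseTable` class, the `sensor#date` row-key layout, and the column names are hypothetical.

```python
import bisect
from typing import Dict, List, Tuple


class ToySparseTable:
    """Toy model of a row-key-sorted, sparse table (not the HBase client API)."""

    def __init__(self) -> None:
        self._keys: List[str] = []                   # row keys, kept sorted
        self._rows: Dict[str, Dict[str, str]] = {}   # row key -> {"family:qualifier": value}

    def put(self, row_key: str, column: str, value: str) -> None:
        """Store one cell; rows only hold the columns that were written."""
        if row_key not in self._rows:
            bisect.insort(self._keys, row_key)
            self._rows[row_key] = {}
        self._rows[row_key][column] = value

    def get(self, row_key: str) -> Dict[str, str]:
        """Direct lookup by row key."""
        return dict(self._rows.get(row_key, {}))

    def scan(self, start: str, stop: str) -> List[Tuple[str, Dict[str, str]]]:
        """Rows with start <= key < stop, located by binary search."""
        lo = bisect.bisect_left(self._keys, start)
        hi = bisect.bisect_left(self._keys, stop)
        return [(key, dict(self._rows[key])) for key in self._keys[lo:hi]]


table = ToySparseTable()
table.put("sensor-1#2024-01-02", "d:temp", "21.5")
table.put("sensor-1#2024-01-01", "d:temp", "20.9")
table.put("sensor-1#2024-01-01", "d:humidity", "40")
table.put("sensor-2#2024-01-01", "d:temp", "18.0")

# All rows for sensor-1, without touching sensor-2 rows.
sensor_1_rows = table.scan("sensor-1#", "sensor-1#~")
```

Because keys are sorted, a range scan jumps straight to the first matching row instead of reading every record, and rows store only the cells that exist, which is what makes the layout sparse.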

Use Case:

The data ingestion process that I will be explaining here is an actual solution that we at Opcito have implemented for one of our projects. A lot of data was coming in as files. We ran jobs on that data to clean it, map it, and write it to a MySQL database. The problem with MySQL was that our system was generating a huge amount of data day in, day out. We had already allotted more than 200 GB of disk space to store it, and the ever-growing data meant increasing our storage space, which meant more expenses. In addition, searching for particular records took a long time in this setup because queries ended up going through the stored data sequentially. This increased the overall processing time and, as a result, affected overall system performance.

Solution:

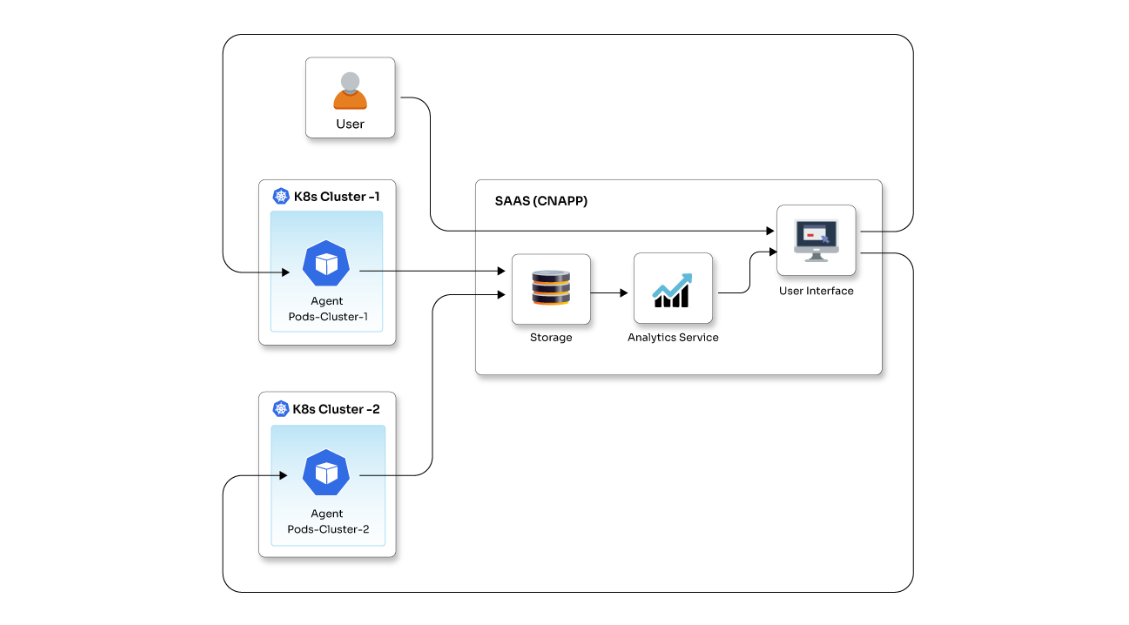

There could be multiple solutions to this problem, but we chose a solution that involved implementing a data pipeline using Hadoop Yarn, Kafka, Spark, HBase, and Elasticsearch. We have used the following architecture:

- Multi-node Hadoop with YARN architecture for running Spark streaming jobs:

We set up a 3-node cluster (1 master and 2 worker nodes) with Hadoop YARN to achieve high availability, and on the cluster, we run multiple Apache Spark jobs over YARN.

- Multi-node Kafka, which is used for streaming:

Kafka is a distributed streaming platform used to build data pipelines. It handles large-scale message processing, and to achieve HA, Kafka is set up on multiple nodes.

- HBase, which is used for storing the data:

It is well suited to sparse datasets.

The whole architecture runs the data pipeline, with multiple Spark streaming jobs processing the messages that arrive in Kafka. The processed data is stored in HBase, and the whole process is much quicker and more cost-efficient than the earlier setup.
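The flow described above (consume messages, clean and map them, then write them to a key-value store) can be sketched end to end with the Python standard library. This is only an illustration of the shape of the pipeline, not our production code: a list stands in for the Kafka topic, a dict stands in for HBase, and the `id` and `ts` field names are hypothetical.

```python
import json
from typing import Dict, Iterable, Optional, Tuple

Row = Tuple[str, Dict[str, str]]


def clean(raw: str) -> Optional[dict]:
    """Parse a JSON message; return None for malformed or incomplete records."""
    try:
        record = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(record, dict) or "id" not in record or "ts" not in record:
        return None
    return record


def to_row(record: dict) -> Row:
    """Map a cleaned record to (row key, columns) for a key-value store."""
    row_key = f"{record['id']}#{record['ts']}"
    columns = {f"d:{name}": str(value)
               for name, value in record.items() if name not in ("id", "ts")}
    return row_key, columns


def run_pipeline(messages: Iterable[str], store: Dict[str, Dict[str, str]]) -> int:
    """Clean, map, and write each message; return how many were dropped."""
    dropped = 0
    for raw in messages:
        record = clean(raw)
        if record is None:
            dropped += 1
            continue
        row_key, columns = to_row(record)
        store.setdefault(row_key, {}).update(columns)
    return dropped


# Hypothetical messages: one valid, one malformed, one missing a required field.
messages = ['{"id": "a1", "ts": 1, "status": "ok"}', "not json", '{"ts": 2}']
store: Dict[str, Dict[str, str]] = {}
dropped = run_pipeline(messages, store)
```

In a real deployment each stage would be replaced by its distributed counterpart, but the clean, map, and write steps keep the same order.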

Real-time data ingestion is very important for modern analytics platforms, and this setup might help you in processing your data in a much faster and more efficient manner. In the next blog, Onkar Kundargi will explain how to build a real-time data pipeline using Apache Spark, the actual multiple data pipeline setup using Kafka and Spark, how you can stream jobs in Python, Kafka settings, Spark optimization and standard data ingestion practices. Stay tuned!
