Security is one of the biggest concerns today, and organizations have started investing considerable time and money in it. 2018 showed all of us why it is so important to secure data and applications against threats. This is why you need to pay particular attention to securing applications, production environments, and data-sensitive services such as Elasticsearch or databases like MySQL and MongoDB.

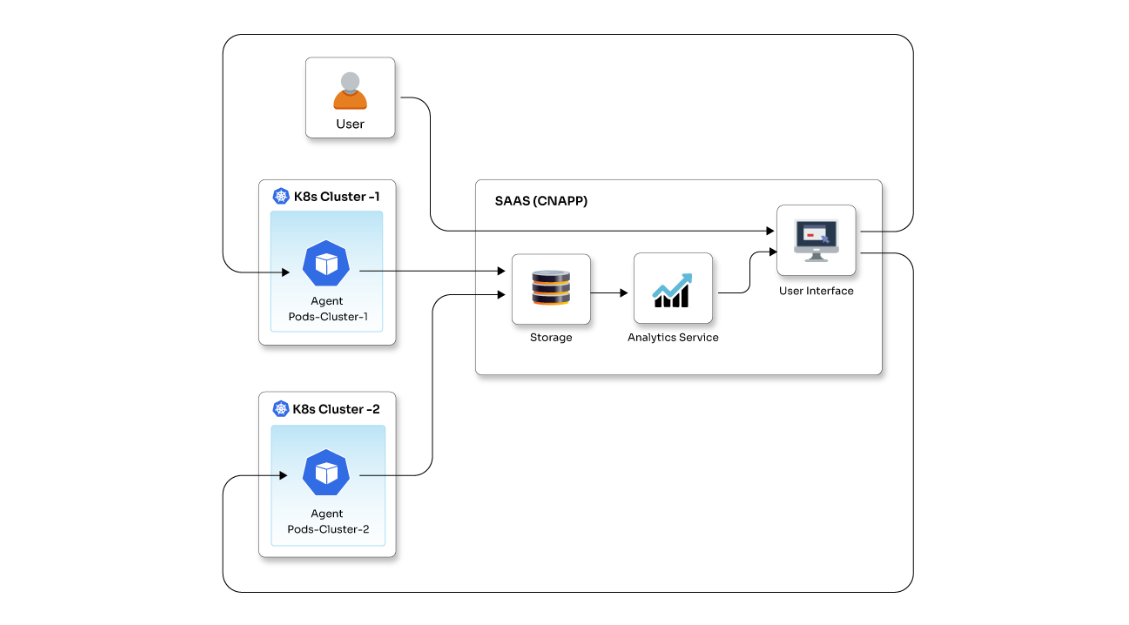

Kubernetes is becoming the go-to solution for container orchestration. Combining Kubernetes with clouds like AWS, Azure, or GCP to deploy applications and services can improve their ability to serve users. But when it comes to data-sensitive services like Elasticsearch or databases, you need to take extra care. In this blog, I will talk about securing an AWS-based K8s environment with data-sensitive services using OpenVPN, and about how to configure an Ingress controller with an internal load balancer so that these services are not accessible from outside your AWS VPC network.

When it comes to controlling your services’ exposure, Kubernetes offers a lot of flexibility. You can configure your Service objects to control who accesses what, both within the cluster and from outside it. If you are using AWS, you can even request a load-balanced IP address or hostname for your service. With a Service object, you can easily connect services running in pods. With Ingress, you also get the flexibility to configure external access, set up basic routing rules to different services, and configure things like TLS. Several Ingress controller implementations are available, and one of the most popular is the NGINX Ingress Controller. This blog will give you a clear idea of how to secure data-sensitive services on Kubernetes, how to create an Ingress controller that uses an internal load balancer, and what the basic architecture should look like for data-sensitive services like Elasticsearch, MySQL, and MongoDB.

For this, set up a VPN server in the VPC where Kubernetes is deployed, and expose all services through an Ingress that is configured with an internal load balancer. I have used OpenVPN in this case, but you can use any VPN of your choice.

After you set up OpenVPN and the client configuration, you should be able to connect to an instance inside the AWS VPC using its private IP. This means you can now reach any endpoint inside the VPC over private IP communication.

If you have Elasticsearch, MySQL, or other databases running as Deployments or StatefulSets inside Kubernetes, you can expose them either with a Service of type LoadBalancer or through an Ingress controller. If you are using a Service of type LoadBalancer, you can use annotations provided by Kubernetes to make the load balancer private by updating your service with the following annotations:

    kind: Service
    apiVersion: v1
    metadata:
      name: elasticsearch
      annotations:
        service.beta.kubernetes.io/aws-load-balancer-internal: 0.0.0.0/0
        service.beta.kubernetes.io/aws-load-balancer-proxy-protocol: '*'
      namespace: kube-system
    spec:
      type: LoadBalancer
      selector:
        app: elasticsearch
      ports:
      - name: esnode
        port: 9200

Adding the service.beta.kubernetes.io/aws-load-balancer-internal: 0.0.0.0/0 annotation makes sure that an internal load balancer is created, so the service can only be accessed when you are connected to the VPN. This keeps the service protected.
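
The same annotation pattern also suits services that speak plain TCP rather than HTTP, such as MongoDB or MySQL. Here is a minimal sketch for a MongoDB service. The service name, the `app: mongodb` label, the `default` namespace, and port 27017 are assumptions for illustration and are not taken from the setup above. Recent Kubernetes documentation shows the internal annotation with the value `"true"`, so check which form your cluster version expects. The proxy-protocol annotation is left out here on the assumption that the database does not parse PROXY protocol headers.

```yaml
# Hypothetical example: name, namespace, label, and port are assumptions.
kind: Service
apiVersion: v1
metadata:
  name: mongodb
  namespace: default
  annotations:
    # Ask AWS for an internal load balancer that only gets private IP addresses.
    service.beta.kubernetes.io/aws-load-balancer-internal: "true"
spec:
  type: LoadBalancer
  selector:
    app: mongodb
  ports:
    - name: mongo
      port: 27017        # port exposed by the load balancer
      targetPort: 27017  # port the MongoDB container is assumed to listen on
```

Once the load balancer is provisioned, clients connected to the VPN should be able to reach its hostname on port 27017, while clients outside the VPC should not.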

Sometimes, to avoid running multiple load balancers, you may prefer a single Ingress load balancer that exposes HTTP and HTTPS routes from outside the cluster to services within the cluster. Traffic routing is controlled by rules defined on the Ingress resource.

        Internet
            |
       [ Ingress ]
       --|-----|--
       [ Services ]

An Ingress can be configured to make services reachable via external URLs, load balance traffic, terminate SSL, and offer name-based virtual hosting. An Ingress controller is responsible for fulfilling the Ingress, usually with a load balancer, though it may also configure your edge router or additional front ends to help handle the traffic.
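
To make the routing rules concrete, here is a minimal sketch of an Ingress resource that sends traffic for one hostname to the elasticsearch service shown earlier and terminates TLS at the Ingress. The hostname `es.internal.example.com`, the Secret name `es-internal-tls`, and the Ingress class name `nginx` are assumptions for illustration. The `networking.k8s.io/v1` API is available from Kubernetes 1.19 onwards; older clusters use an earlier Ingress API version and typically select the controller with the `kubernetes.io/ingress.class` annotation instead.

```yaml
# Hypothetical Ingress: host, Secret name, and Ingress class are assumptions.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: elasticsearch
  namespace: kube-system    # must be the namespace of the backend Service
spec:
  ingressClassName: nginx   # assumed name of the NGINX Ingress class
  tls:
    - hosts:
        - es.internal.example.com
      secretName: es-internal-tls    # TLS Secret with the certificate and key
  rules:
    - host: es.internal.example.com  # name-based virtual hosting
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: elasticsearch
                port:
                  number: 9200
```

When a service is published through an Ingress like this, it no longer needs its own load balancer, so a ClusterIP service is usually enough for the backend.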

To create a private, internal load balancer-based Ingress controller within your Kubernetes cluster, run the following commands:

    wget https://raw.githubusercontent.com/sachingade20/k8slabs/master/ingress/ingress-nginx-deployment-private.yaml
    kubectl apply -f ingress-nginx-deployment-private.yaml

This is a general template that deploys an NGINX-based Ingress controller and exposes it through a private load balancer.

Please note that the controller will not be accessible publicly, because we are creating a private load balancer. The part of the template that does this is the Service that exposes ingress-nginx through an internal AWS load balancer:

    kind: Service
    apiVersion: v1
    metadata:
      name: ingress-nginx
      labels:
        k8s-addon: ingress-nginx.addons.k8s.io
      annotations:
        service.beta.kubernetes.io/aws-load-balancer-proxy-protocol: '*'
        service.beta.kubernetes.io/aws-load-balancer-internal: 0.0.0.0/0
      namespace: kube-system
    spec:
      type: LoadBalancer
      selector:
        app: ingress-nginx
      ports:
      - name: http
        port: 80
        targetPort: http
      - name: https
        port: 443
        targetPort: https
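
Because the Service above enables PROXY protocol on the load balancer, the NGINX Ingress controller has to be told to expect it. The downloaded template may already take care of this. If it does not, a ConfigMap along these lines is one way to do it. The ConfigMap name `ingress-nginx` is an assumption and must match whatever the controller's `--configmap` flag points to.

```yaml
# Hypothetical ConfigMap: the name is an assumption and must match the
# ConfigMap referenced by the controller's --configmap flag.
kind: ConfigMap
apiVersion: v1
metadata:
  name: ingress-nginx
  namespace: kube-system
data:
  # Tell NGINX to parse the PROXY protocol header sent by the load balancer,
  # so that the original client IP is preserved.
  use-proxy-protocol: "true"
```

If the load balancer sends PROXY protocol headers but NGINX is not configured to read them, or the other way around, requests are likely to fail, so keep the two settings in sync.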

That's it! With this architecture, you can make sure your data-sensitive services are not publicly accessible, which secures the data behind them, while the Ingress controller takes care of traffic routing and reduces overall complexity.