Entering the Docker world: A Hitchhiker's Guide to Clustering

Posted By

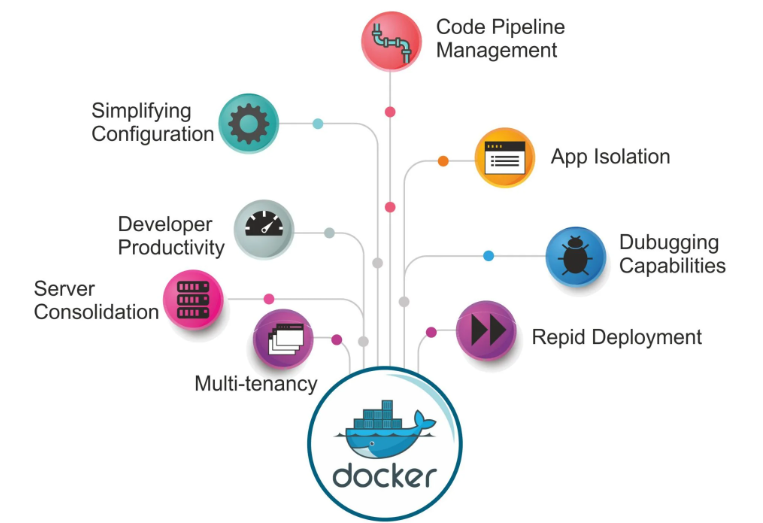

Chaitanya Deshpande

Docker, a relatively new container technology, is making waves the size of a tsunami these days. Why? Simply because it makes packaging and shipping your apps much faster and simpler. As a leaner, lighter, more portable alternative to virtual machines, Docker also makes it easy to run many apps on the same old servers. Wrap all this up in the pretty bow of open-source software, and Docker has every reason to be the popular kid in school.

Linux virtualization techniques such as LXC (LinuX Containers) have been around for years; however, because of the expertise and training required to actually deploy and maintain them, they were never widely preferred over virtual machines (VMs). This article explains the need for containerized applications and the roles of the different tools and supporting frameworks for Docker containers.

What difference does Docker make?

The kaleidoscope of changes that transformed the architecture, infrastructure, and usage of applications since the beginning of the World Wide Web can be described as nothing less than radical. We drastically moved from thick to thin clients and all the way to mobile apps. In a similar way, we progressed from dedicated hosted servers to Virtual Private Servers (VPS) and finally towards cloud infrastructure. The simple rule of cause and effect would dictate that our applications will need a lot of flexibility and reliability to accommodate any further changes.

These changes also mean that an application must be highly scalable and available in order to stay relevant and cutting-edge. Meeting those needs requires a complete ecosystem of infrastructure and resources that ensures the smooth deployment of the application, and enabling interaction between the components of such an ecosystem requires a lot of configuration. Furthermore, applications change constantly to keep up with market requirements and trends, so there is a need for a continuous pipeline of stages such as development, QA, and production. Now imagine the complexity of repeating that configuration at each stage of the pipeline and migrating the application through these stages.

All this makes developing a sleek, intuitive application seem like an unconquerable mountain. However, there happens to be an easy solution to this complication: Docker, of course!

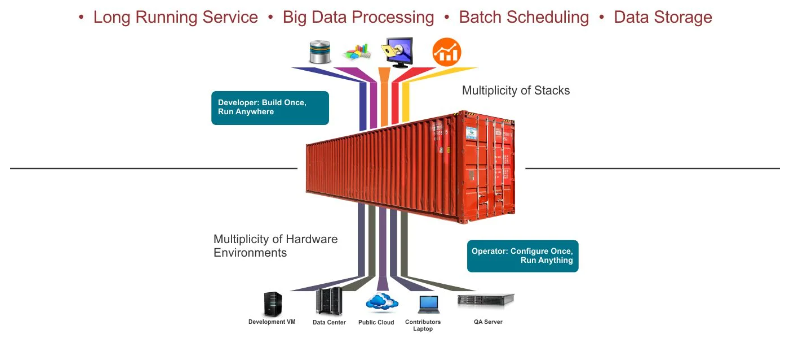

Just as shipping containers are standardized so that goods can move across multiple transport methods, Docker containers let any payload and its dependencies be encapsulated as a lightweight, portable, self-sufficient unit. A container can be manipulated using standard operations and runs consistently on any host that runs Docker.

Following are some popular use cases for Docker:

In the DevOps world, there are two ways to look at Docker: the developer view, "Build Once, Run Anywhere," and the operations view, "Configure Once, Run Anything."
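To make "Build Once, Run Anywhere" concrete, here is a minimal sketch that builds `docker run` command lines for the development, QA, and production stages mentioned earlier. The image tag `example/myapp:1.0`, the container names, port 8080, and the environment variables are hypothetical placeholders; only the flags themselves (`-d`, `--name`, `-p`, `-e`) are standard `docker run` options. The point is that the image never changes between stages; only the configuration passed in does.

```python
# Hypothetical image tag and per-stage settings, for illustration only.
IMAGE = "example/myapp:1.0"

STAGE_ENV = {
    "dev": {"APP_ENV": "development", "DB_HOST": "localhost"},
    "qa": {"APP_ENV": "qa", "DB_HOST": "qa-db.internal"},
    "prod": {"APP_ENV": "production", "DB_HOST": "prod-db.internal"},
}


def docker_run_argv(stage: str, image: str = IMAGE, host_port: int = 8080) -> list:
    """Build a `docker run` command line for one pipeline stage.

    The image is the same in every stage ("build once, run anywhere");
    only the -e environment variables differ between stages.
    """
    argv = ["docker", "run", "-d", "--name", f"myapp-{stage}",
            "-p", f"{host_port}:8080"]  # assumes the app listens on port 8080
    for key, value in sorted(STAGE_ENV[stage].items()):
        argv += ["-e", f"{key}={value}"]
    argv.append(image)  # the image name comes after all options
    return argv


if __name__ == "__main__":
    for stage in STAGE_ENV:
        print(" ".join(docker_run_argv(stage)))
```

On a machine with Docker installed, passing one of these lists to `subprocess.run(..., check=True)` would start the container. Because the function accepts any `image`, the same operational recipe also works for a completely different application, which is the "Configure Once, Run Anything" side of the coin.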

How Docker can be used in a cluster

The ability to package, transfer, and run application code across multiple environments is all good fun, but it adds the complexity of managing the applications as a whole. Moreover, as we increase our use of containers, new challenges come up: deciding which containers run where, dealing with large numbers of containers, and enabling communication between containers across hosts. This is where clustering tools come into the picture. Some popular tools are:

Fleet is a low-level and fairly simple orchestration layer that can be used as a base for running higher-level orchestration tools, such as Kubernetes or custom systems.

Swarm uses the standard Docker interface, so integrating it into existing workflows is very simple. However, relying on that standard interface can make it difficult to support the more complex scheduling that custom interfaces allow.

Kubernetes is an opinionated orchestration tool with service discovery and baked-in replication. It may require some re-designing of existing applications, but if used correctly, it will result in a fault-tolerant and scalable system.

Mesos is a low-level, battle-hardened scheduler that supports several frameworks for container orchestration, including Marathon, Kubernetes, and Swarm. Kubernetes and Mesos are more developed and stable than Swarm. In terms of scale, only Mesos has been proven to support large-scale systems consisting of hundreds or thousands of nodes. However, for small clusters of, say, fewer than a dozen nodes, Mesos may be an overly complex solution.

On its own, Mesos only provides the basic "kernel" layer of your cluster. It lets other applications request cluster resources to perform tasks, but does no work itself. Frameworks bridge the gap between the Mesos layer and your applications. They are higher-level abstractions that simplify the process of launching tasks on the cluster.
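In Mesos, this division of labor works through resource offers: the master offers the free CPU and memory on each agent to a framework, and the framework's own scheduler decides which of its tasks to launch there. The toy simulation below sketches that two-level idea in plain Python. It is not the Mesos scheduler API; the classes `Offer`, `Task`, and `SimpleFramework` and the greedy first-fit policy are hypothetical stand-ins chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Offer:
    """Free resources on one agent, as offered to a framework (simplified)."""
    agent: str
    cpus: float
    mem: float  # MB


@dataclass
class Task:
    name: str
    cpus: float
    mem: float  # MB


@dataclass
class SimpleFramework:
    """Hypothetical framework scheduler using a greedy first-fit policy."""
    pending: list
    launched: list = field(default_factory=list)  # (task name, agent) pairs

    def resource_offer(self, offer: Offer) -> list:
        """Launch pending tasks that fit in this offer; keep the rest pending.

        Resources left over after launching are simply not used here
        (in real Mesos they would go back to the pool for other offers).
        """
        cpus, mem = offer.cpus, offer.mem
        accepted, still_pending = [], []
        for task in self.pending:
            if task.cpus <= cpus and task.mem <= mem:
                cpus -= task.cpus
                mem -= task.mem
                accepted.append(task)
                self.launched.append((task.name, offer.agent))
            else:
                still_pending.append(task)
        self.pending = still_pending
        return accepted


def allocate(offers: list, framework: SimpleFramework) -> None:
    """Stand-in for the Mesos master: offer each agent's free resources in turn."""
    for offer in offers:
        framework.resource_offer(offer)


if __name__ == "__main__":
    fw = SimpleFramework(pending=[Task("web-1", 1.0, 512),
                                  Task("web-2", 1.0, 512),
                                  Task("db", 2.0, 2048)])
    allocate([Offer("agent-a", 2.0, 1024), Offer("agent-b", 4.0, 4096)], fw)
    print(fw.launched)
```

Here both web tasks fill `agent-a`, and `db` only fits once `agent-b` is offered. Mesos itself never decides where `db` goes; the framework does, which is why different frameworks can apply very different placement policies on the same cluster.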

Consider the following cluster configuration: Mesos manages your application's resources at the kernel level, while the dockerized parts of the application remain portable and relatively hardware-independent.

The following are some popular frameworks built on Mesos:

Long-Running Services

- Aurora is a service scheduler that runs on top of Mesos, enabling you to run long-running services that take advantage of Mesos' scalability, fault tolerance, and resource isolation.

- Marathon is a private PaaS built on Mesos. It automatically handles hardware or software failures and ensures an app is "always on."

- Singularity is a scheduler (HTTP API and web interface) for running Mesos tasks: long-running processes, one-off tasks, and scheduled jobs.

- is a simple web application that provides a white-label "Megaupload" for storing and sharing files in S3.
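As an example of how a long-running service is handed to one of these frameworks, the sketch below builds a minimal Marathon app definition that runs a Docker image. The fields used (`id`, `cpus`, `mem`, `instances`, and a `container` of type `DOCKER` with an `image`) follow Marathon's app JSON format; the app ID, image name, and resource numbers are hypothetical, and newer Marathon versions accept many more fields (networking, health checks) that are omitted here.

```python
import json


def marathon_docker_app(app_id: str, image: str, cpus: float = 0.5,
                        mem: float = 256, instances: int = 2) -> dict:
    """Return a minimal Marathon app definition that runs a Docker image."""
    if instances < 1:
        raise ValueError("instances must be at least 1")
    return {
        "id": app_id,            # path-like app ID, e.g. "/myapp"
        "cpus": cpus,            # CPU share per instance
        "mem": mem,              # memory per instance, in MB
        "instances": instances,  # Marathon keeps this many copies running
        "container": {
            "type": "DOCKER",
            "docker": {"image": image},
        },
    }


if __name__ == "__main__":
    app = marathon_docker_app("/myapp", "example/myapp:1.0")
    print(json.dumps(app, indent=2))
```

Such a definition is typically submitted to Marathon's `/v2/apps` REST endpoint; Marathon then asks Mesos for resources and restarts instances if a container or host fails, which is what keeps the app "always on."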

Big Data Processing

- Cray Chapel is a productive parallel programming language. The Chapel Mesos scheduler lets you run Chapel programs on Mesos.

- DPark is a Python clone of Spark: a MapReduce-like framework, written in Python, that runs on Mesos.

- Exelixi is a distributed framework for running genetic algorithms at scale.

- Hadoop: Running Hadoop on Mesos distributes MapReduce jobs efficiently across an entire cluster.

- Hama is a distributed computing framework based on Bulk Synchronous Parallel computing techniques for massive scientific computations, e.g., matrix, graph, and network algorithms.

- MPI is a message-passing system designed to function on various parallel computers.

- Spark is a fast and general-purpose cluster computing system that makes writing parallel jobs easy.

- Storm is a distributed real-time computation system. Storm makes it easy to reliably process unbounded streams of data. It does for real-time processing what Hadoop did for batch processing.
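Several of the frameworks above (Hadoop, DPark, Spark) are built around the MapReduce programming model. The single-process word count below sketches its three phases: map emits key/value pairs, shuffle groups values by key, and reduce combines each group. It is only an illustration of the model; a framework such as Hadoop on Mesos would run many map and reduce calls in parallel on different machines, and the function names here are hypothetical.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line: str) -> list:
    """Map: emit (word, 1) for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs) -> dict:
    """Shuffle: group all emitted values by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list) -> tuple:
    """Reduce: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(lines: list) -> dict:
    """Run map, shuffle, and reduce in sequence over a list of lines."""
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    return dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())


if __name__ == "__main__":
    print(word_count(["Docker on Mesos", "Mesos runs Docker"]))
```

Because each map call looks at one line and each reduce call at one key, both phases can be split across a cluster independently; only the shuffle requires moving data between machines.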

Batch Scheduling

- Chronos is a distributed job scheduler that supports complex job topologies. It can be used as a more fault-tolerant replacement for cron.

- Jenkins is a continuous integration server. The Mesos Jenkins plugin lets it dynamically launch workers on a Mesos cluster depending on the workload.

- JobServer is a distributed job scheduler and processor that allows developers to build custom batch-processing Tasklets using a point-and-click web UI.

- Torque is a distributed resource manager controlling batch jobs and distributed compute nodes.
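Unlike cron, Chronos describes schedules as ISO 8601 repeating intervals of the form `R[n]/start/period`, where a bare `R` repeats forever. The sketch below builds such a schedule string and a minimal Chronos job that runs inside a Docker container. The field names (`name`, `command`, `schedule`, `epsilon`, `owner`, `container`) follow the Chronos job JSON format; the job name, command, image, and owner address are hypothetical, and the schedule helper only supports whole-hour periods to keep it short.

```python
from datetime import datetime, timezone
from typing import Optional


def chronos_schedule(start: datetime, every_hours: int,
                     repeats: Optional[int] = None) -> str:
    """Build an ISO 8601 repeating interval, e.g. 'R/2024-01-01T02:00:00Z/PT24H'.

    'R' with no count repeats forever; 'R5' would run five times.
    `start` should be timezone-aware; it is converted to UTC.
    """
    if every_hours <= 0:
        raise ValueError("every_hours must be positive")
    count = "" if repeats is None else str(repeats)
    start_utc = start.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    return f"R{count}/{start_utc}/PT{every_hours}H"


def chronos_docker_job(name: str, command: str, image: str, schedule: str) -> dict:
    """Return a minimal Chronos job definition that runs in a Docker container."""
    return {
        "name": name,
        "command": command,
        "schedule": schedule,
        "epsilon": "PT15M",          # a missed run may still start up to 15 min late
        "owner": "ops@example.com",  # hypothetical contact for failure alerts
        "container": {"type": "DOCKER", "image": image},
    }


if __name__ == "__main__":
    start = datetime(2024, 1, 1, 2, 0, tzinfo=timezone.utc)
    job = chronos_docker_job("nightly-report", "python report.py",
                             "example/report:1.0", chronos_schedule(start, 24))
    print(job["schedule"])
```

A definition like this would typically be POSTed to the Chronos scheduler API; check the exact endpoint and accepted fields against the documentation for your Chronos version.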

Data Storage

- Cassandra is a highly available distributed database. Linear scalability and proven fault tolerance on commodity hardware or cloud infrastructure make it the perfect platform for mission-critical data.

- Elasticsearch is a distributed search engine, and Mesos makes it easy to run and scale.

- Hypertable is a high-performance, scalable, distributed storage and processing system for structured and unstructured data.

Conclusion

Nowadays, applications built on a microservice architecture consist of multiple distributed services, each dedicated to a specific task. Because Mesos, together with frameworks such as Marathon, can run and manage Docker containers, Docker and Mesos can be a viable solution for the microservice scenario. Docker containers provide consistent, compact, and flexible packaging for application builds, and delivering applications with Docker on Mesos promises a truly elastic, efficient, and consistent platform for a range of applications, on-premises or in the cloud. Hence, depending on how your application uses Docker containers (refer to the Docker use cases above), you should choose an appropriate framework to work with the Docker-Mesos configuration.

Contact our experts to learn more about how Docker can help your application!
