Building a highly scalable, distributed deployment ecosystem using Kubernetes, Docker and Mesos.

Posted by Mukti Chowkwale

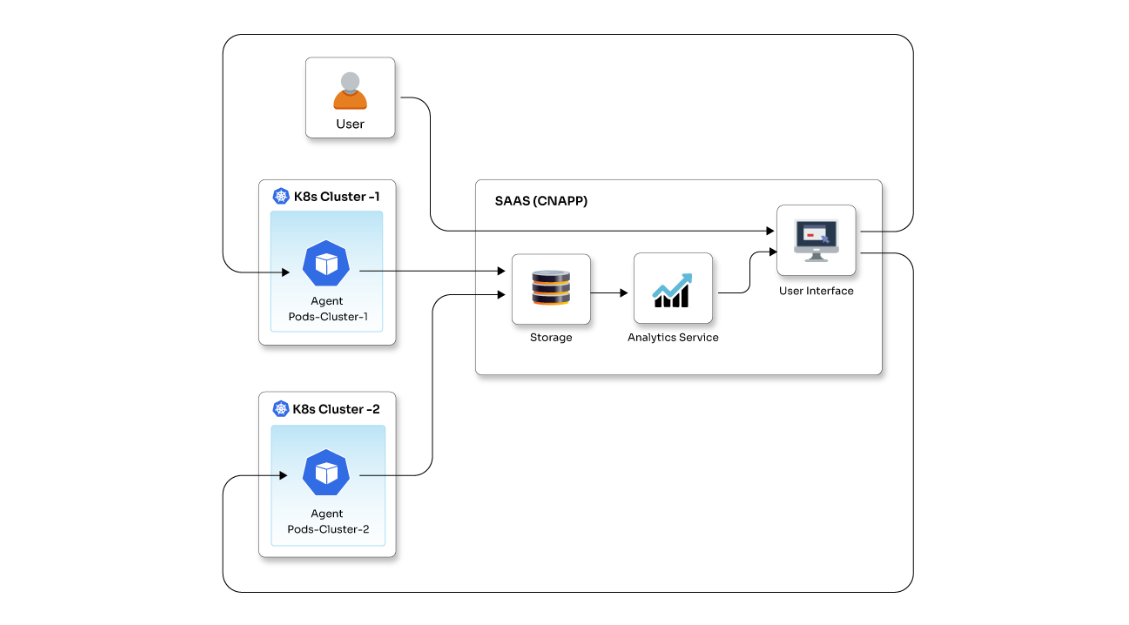

The customer is a next-generation recruitment analytics platform. It gives its own customers detailed insights into the recruitment process, removing drudgery from hiring while keeping candidates engaged throughout.

Objective

The client approached Opcito to design and develop a deployment ecosystem that could handle application deployment, hyperscaling, and monitoring across a data center with 450+ physical servers, 1,700+ processing cores, and 6+ TB of RAM.

The main objective was to create a highly scalable deployment ecosystem that could use all available resources seamlessly and manage large deployments with minimal effort.

The initial challenge was to design an ecosystem that could hyperscale applications by isolating a large number of server resources across the data center. This capability was to be built on a distributed architecture with several scaling patterns, so that resource utilization and time to delivery stayed consistent.

Approach

Our approach to this challenge is best explained with a metaphor: if a Docker application is a Lego brick, Kubernetes is a kit for building the Millennium Falcon, and the Mesos cluster is a whole Star Wars universe made of Legos. After understanding the client’s requirements, we came up with the following strategy:

- Developed a customized platform for configuring and creating Docker containers using Kubernetes.

- Customized the Kubernetes configuration for managing containerized applications across multiple hosts, providing basic mechanisms for deploying, maintaining, and scaling applications.

- Developed a hyper-scale controller for Docker that scales containers to match the demands of each deployment, and provided a customized integration bridge using the Kubernetes and Docker APIs.

- Developed a resource manager on the Mesos platform for distributed computing environments, providing resource isolation and management across a cluster of slave nodes.

- Integrated the Mesos platform to join multiple physical resources into a single virtual resource. Mesos schedules CPU and memory across the cluster much as the Linux kernel schedules local resources.

- Created and configured the deployment ecosystem with Mesos, Kubernetes, and Docker.

- Provided a custom GUI, built on the Kubernetes and Mesos CLIs and APIs, for managing application deployment, scaling configuration, and container metadata updates.
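The case study does not say how the hyper-scale controller decides how many containers a deployment needs. One common rule, and the one documented for the Kubernetes Horizontal Pod Autoscaler, is proportional: desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), with no change when the ratio falls within a tolerance (10% by default in the HPA). The Python sketch below applies that rule to an average per-replica metric such as CPU utilization. The function `desired_replicas`, its replica bounds, and its defaults are hypothetical illustrations, not Opcito's controller.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 100,
                     tolerance: float = 0.1) -> int:
    """Proportional scaling rule in the style of the Kubernetes HPA.

    Hypothetical helper: current_metric is the observed average per
    replica (e.g. CPU utilization %), target_metric the desired average.
    The bounds and tolerance are illustrative defaults.
    """
    if current_replicas <= 0 or target_metric <= 0:
        raise ValueError("replicas and target metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current size to avoid flapping.
        desired = current_replicas
    else:
        # Scale the replica count by the load ratio, rounding up.
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds for this deployment.
    return max(min_replicas, min(max_replicas, desired))
```

For example, four replicas running at 90% against a 60% target scale by a factor of 1.5, so `desired_replicas(4, 90.0, 60.0)` returns 6. The tolerance band keeps the controller from resizing on small fluctuations, and the clamp stops a traffic spike from claiming more of the cluster than the deployment is allowed.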
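Joining many physical servers into one virtual resource rests on Mesos's two-level scheduling: agents report their CPU and memory to the master, the master offers those resources to framework schedulers, and each framework decides which offers to accept for its tasks. The Python sketch below models that flow in memory with a first-fit placement policy. It is a simplified illustration under stated assumptions: it does not use the Mesos API, it treats every agent with spare capacity as an open offer, and the classes `Agent`, `Task`, and `Cluster` and the node sizes are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    """One physical server as seen by the scheduler (hypothetical model)."""
    name: str
    cpus: float
    mem_mb: int


@dataclass
class Task:
    name: str
    cpus: float
    mem_mb: int


@dataclass
class Cluster:
    agents: list[Agent] = field(default_factory=list)

    def total(self) -> tuple[float, int]:
        """Pooled free capacity: the cluster viewed as one virtual machine."""
        return (sum(a.cpus for a in self.agents),
                sum(a.mem_mb for a in self.agents))

    def offers(self) -> list[Agent]:
        """Stand-in for resource offers: agents that still have spare capacity."""
        return [a for a in self.agents if a.cpus > 0 and a.mem_mb > 0]

    def place(self, tasks: list[Task]) -> dict[str, str]:
        """First-fit: accept the first offer large enough for each task."""
        placement: dict[str, str] = {}
        for task in tasks:
            for agent in self.offers():
                if agent.cpus >= task.cpus and agent.mem_mb >= task.mem_mb:
                    # Accepting the offer consumes that agent's resources.
                    agent.cpus -= task.cpus
                    agent.mem_mb -= task.mem_mb
                    placement[task.name] = agent.name
                    break
            else:
                # No current offer fits; the task waits for freed resources.
                placement[task.name] = "pending"
        return placement
```

With two agents of 4 CPUs and 16,384 MB each, `total()` reports a single pool of 8 CPUs and 32,768 MB. Placing tasks that need 2, 3, 2, and 4 CPUs sends the first three to `node-1`, `node-2`, and `node-1`, and leaves the 4-CPU task pending, because no single agent has four CPUs left.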
