Automated RSpec TestOps framework for parallel testing

Posted by Shrikant Patil

TestOps is changing the way QA works and is increasing the importance of continuous testing. Opcito's TestOps teams are revolutionizing traditional testing methodologies and helping to deliver quality-assured products and services. Organizations now prefer automated, continuous testing because it gives continuous and immediate feedback that surfaces business risks and product defects. Catching errors this early speeds up the development of quality-assured products and reduces the complexity of the SDLC. Microservices are another factor that reduces SDLC complexity. Whether microservices or monoliths are better is a topic for another discussion, but many of us are currently switching to microservices, and automating TestOps for them can sometimes be difficult. In this blog, I will discuss a case where one of our clients had more than 20 such microservices, each with its own vital configuration files. The client was also using a new communication pattern between their ActiveRecord models, one that ran asynchronously through Sidekiq.

Before moving to Sidekiq, let's first look at what Publish-Subscribe messaging is. When multiple services or applications communicate with each other, there are scenarios where several applications need to receive the same messages, and Publish-Subscribe messaging makes that easy. A Topic is the core of a Publish-Subscribe messaging system: multiple Publishers may send messages to a Topic, and every Subscriber to that Topic receives all the messages sent to it.

As shown in the figure above, this model comes in handy when a group of applications wants to notify each other of a particular event or occurrence. The important thing to note is that there can be multiple senders and multiple receivers. A messaging system lets you send messages between processes, applications, and servers. Applications connect to such a system and can transfer messages both ways, which means a Publisher (the one that sends a message) can also be a Receiver/Subscriber at the same time.
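To make the Topic model concrete, here is a minimal in-memory sketch in Ruby. The `Topic` class and the topic names are hypothetical, and a real deployment would use a broker such as Redis rather than in-process blocks. It shows two publishers sending to one topic, several subscribers receiving every message, and one application acting as both subscriber and publisher.

```ruby
# Minimal in-memory Publish-Subscribe sketch (illustrative only; not Sidekiq or Redis).
class Topic
  attr_reader :name

  def initialize(name)
    @name = name
    @subscribers = []
    @lock = Mutex.new
  end

  # Register a block that receives every message published to this topic.
  def subscribe(&handler)
    @lock.synchronize { @subscribers << handler }
    self
  end

  # Deliver a message to every current subscriber, whoever published it.
  def publish(message)
    handlers = @lock.synchronize { @subscribers.dup }
    handlers.each { |handler| handler.call(message) }
  end
end

orders    = Topic.new("orders")
shipments = Topic.new("shipments")

billing_inbox = []
notifications = []

# Billing only subscribes.
orders.subscribe { |msg| billing_inbox << msg }

# Shipping subscribes to "orders" and publishes to "shipments": one app plays both roles.
orders.subscribe do |msg|
  shipments.publish({ event: "shipment_scheduled", order_id: msg[:id] })
end

shipments.subscribe { |msg| notifications << msg }

# Two publishers (web and mobile) send to the same topic.
orders.publish({ from: "web", event: "order_created", id: 1 })
orders.publish({ from: "mobile", event: "order_created", id: 2 })
```

Every subscriber to `orders` sees both messages, and the shipping subscriber fans them out again on a second topic.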

Now, let's look at Sidekiq. Sidekiq is a full-featured background processing framework for Ruby. It aims to be simple to integrate with any modern Rails application and to offer much higher performance than other existing solutions.

In this particular case, the client was using a microservice-based architecture instead of a traditional monolith, which meant many services could enqueue jobs for an isolated worker. For example, a call to the web API of microservice0 calls the SomeWorker.perform_async function, which puts an asynchronous job into Sidekiq.
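The decoupling between the enqueuing service and the isolated worker can be sketched in plain Ruby. This is a hypothetical stand-in: an in-process `Queue` plays the role of the Redis-backed Sidekiq queue, and the `enqueue` helper plays the role of `SomeWorker.perform_async`. What it illustrates is that the enqueuing side only needs the worker's class name and arguments, while only the worker side defines `SomeWorker`.

```ruby
require 'json'

# Stand-in for the Redis queue that only the isolated worker watches
# (assumption: in production this is Sidekiq's queue, not an in-process Queue).
JOB_QUEUE = Queue.new

# --- Isolated worker side: the only place that defines SomeWorker ---
class SomeWorker
  PROCESSED = []

  def perform(record_id)
    PROCESSED << record_id # business logic would go here
  end
end

# --- Enqueuing service side (e.g. microservice0): knows only the class *name* ---
def enqueue(class_name, *args)
  # Serialize the worker class name and arguments as JSON, similar in spirit
  # to the 'class' and 'args' keys of a Sidekiq job.
  JOB_QUEUE << JSON.generate("class" => class_name, "args" => args)
end

# Worker loop: resolve the class by name and call #perform with the arguments.
worker = Thread.new do
  while (payload = JOB_QUEUE.pop) != :stop
    job = JSON.parse(payload)
    Object.const_get(job["class"]).new.perform(*job["args"])
  end
end

enqueue("SomeWorker", 1)
enqueue("SomeWorker", 2)
JOB_QUEUE << :stop # sentinel so the sketch terminates
worker.join
```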

The same application does not need to know how to both enqueue and consume a given job; you can also put a job directly into a Redis server that only an isolated worker is watching. In this case, combining API tests with tests of the Publish-Subscribe messaging system made perfect sense, because together they would cover all the test scenarios thoroughly. Keep in mind that the automated test scripts should execute all the jobs in parallel, because the application may get data from multiple third-party services (in this case, CRM applications).
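Why parallel execution matters here can be shown with a small Ruby sketch. The CRM services are hypothetical stubs that only sleep to simulate network latency; each fetch runs in its own thread, so the total time is close to the slowest call rather than the sum of all calls.

```ruby
# Hypothetical stub standing in for a call to a third-party CRM service.
def stub_crm(source, contacts, latency: 0.1)
  lambda do
    sleep latency # simulate network latency
    { source: source, contacts: contacts }
  end
end

CRM_STUBS = {
  "crm_a" => stub_crm("crm_a", 3),
  "crm_b" => stub_crm("crm_b", 5),
  "crm_c" => stub_crm("crm_c", 2)
}

# Run every fetch in its own thread so slow services overlap instead of queuing up.
def fetch_all_in_parallel(stubs)
  threads = stubs.map { |name, fetch| Thread.new { [name, fetch.call] } }
  threads.map(&:value).to_h # Thread#value joins the thread and returns its result
end

started = Process.clock_gettime(Process::CLOCK_MONOTONIC)
results = fetch_all_in_parallel(CRM_STUBS)
elapsed = Process.clock_gettime(Process::CLOCK_MONOTONIC) - started
```

Threads fit this case because the work is I/O-bound; CPU-bound checks would need separate processes to run truly in parallel on MRI.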

In a CI/CD architecture, an automated testing framework like this is very useful because it automatically verifies every new deployment in the CI/CD flow.

So what you need is a test automation framework built around Sidekiq and Publish-Subscribe messaging that runs automated tests in parallel, in real time, for every deployment. It verifies both the APIs and the business process initiated by a queue message, i.e., the message-driven business logic.

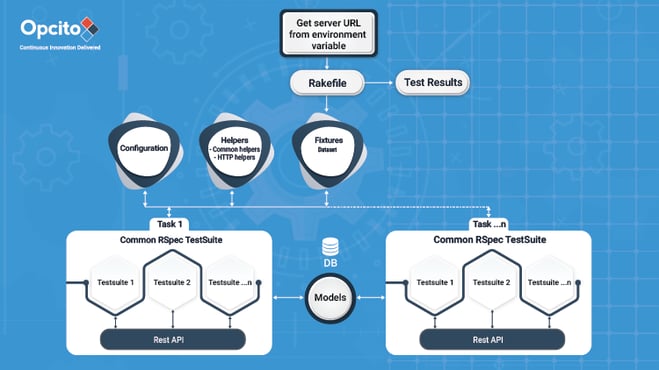

The following are the test automation solution and components that Opcito's TestOps team designed to meet these goals:

Queue API

Sidekiq powers the message-driven platform, and the following code automates this process with the Sidekiq API by pushing a job onto a queue and then looking it up by its job ID:

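```ruby
require 'sidekiq'
require 'sidekiq/api'    # Sidekiq::Queue is defined here; 'sidekiq' alone does not load it
require 'sidekiq-status'

queue_name = 'default'   # placeholder: the queue your worker listens on
queue_object = Sidekiq::Queue.new(queue_name)

# The class may be given by name, so the enqueuing service need not load the worker.
# Client.push returns the job ID (jid).
jid = Sidekiq::Client.push('class' => 'SomeWorker', 'queue' => queue_name, 'args' => [1])

# Returns the job while it is still enqueued, or nil once a Sidekiq process has picked it up.
job = queue_object.find_job(jid)
```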

To test each application in isolation, Opcito's TestOps experts decided to use RSpec. In this setup, RSpec helps arrange the shared files in name order. Shared examples are a good way to run multiple test suites in the required manner because they let you describe the behavior of classes or modules. When a shared group is declared, its content is stored and is only realized in the context of another example group, which provides any context the shared group needs to run. Shared examples do have a few limitations: the files containing shared groups must be loaded before the files that use them. While there are conventions to handle this, RSpec does not do anything special (like autoloading), because doing so would require a strict file-naming convention that would break existing suites.
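One common convention for the load-order limitation is to keep shared groups in a dedicated folder and load that folder first. The sketch below assumes a hypothetical rule (files under `spec/support/shared/` or named `shared_*.rb` hold shared groups) and computes a load order that puts those files first, sorted by name for a predictable sequence.

```ruby
# Hypothetical convention: these files define shared example groups and must
# be loaded before the specs that use them.
def shared_group_file?(path)
  path.include?("/support/shared/") || File.basename(path).start_with?("shared_")
end

# Order spec files so every shared-group file comes first; sort each part
# by name so the sequence is stable between runs.
def load_order(paths)
  shared, specs = paths.partition { |path| shared_group_file?(path) }
  shared.sort + specs.sort
end

files = [
  "spec/testsuite_spec.rb",
  "spec/support/shared/suite_2.rb",
  "spec/api/orders_spec.rb",
  "spec/support/shared/suite_1.rb"
]
ordered = load_order(files)
```

In practice the same effect is usually achieved in `spec_helper.rb` with a line such as `Dir["spec/support/**/*.rb"].sort.each { |f| require File.expand_path(f) }`, which runs before any example group that uses the shared examples is defined.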

For a system that feeds multiple configurations into common test suites, a typical framework for parallel execution looks like this:

Each configuration, together with the common RSpec test suite, forms a task to be executed.
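Turning each configuration file into a named task is mostly string handling, and one detail is easy to get wrong: `String#delete` removes a *set of characters*, not a substring. The sketch below uses hypothetical fixture names to compare it with `File.basename`, then builds one task description per configuration (common suite plus fixture plus report path).

```ruby
fixtures = ["spec/fixtures/crm_salesforce.yml", "spec/fixtures/billing.yml"]

# Pitfall: delete(".yml") strips every '.', 'y', 'm' and 'l' from the name.
naive = fixtures.map { |path| File.basename(path).delete(".yml") }

# File.basename with a suffix removes only the trailing ".yml" extension.
safe = fixtures.map { |path| File.basename(path, ".yml") }

# One task per configuration: the common suite, that fixture, and its own report.
tasks = safe.zip(fixtures).map do |name, fixture|
  { name: name, suite: "spec/testsuite_spec.rb", fixture: fixture, report: "reports/#{name}.html" }
end
```

With these inputs, `naive` is `["cr_saesforce", "biing"]`, while `safe` is `["crm_salesforce", "billing"]`.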

Common RSpec test suites

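```ruby
RSpec.shared_examples "Shared Test Suite 1" do |parameter|
  it "runs the test case with the given parameter, if any" do
    expected = parameter # placeholder: expected value taken from the fixture
    actual   = parameter # placeholder: value returned by the system under test
    expect(actual).to eq(expected) # RSpec convention: actual in expect(), expected in eq()
  end
end
```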

testsuite_spec.rb

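```ruby
require 'spec_helper'
require 'yaml'

# The Rakefile runs this file with --pattern "spec/testsuite_spec.rb, <fixture>";
# the second comma-separated entry is the fixture for this task.
fixture_path = ARGV[1].split(",")[1].strip
config = YAML.load_file("spec/#{fixture_path}")

# File.basename strips only the extension; String#delete('.yml') would drop every '.', 'y', 'm' and 'l'.
RSpec.describe "#{File.basename(fixture_path, '.yml')} Testsuite" do
  # Keep exposing the loaded config to shared examples that read @file.
  before { @file = config }

  # Pass the config at definition time; an ivar set in before() is still nil here.
  it_behaves_like "Shared Test Suite 1", config
  it_behaves_like "Shared Test Suite 2"
end
```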

Rakefile

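```ruby
require 'rspec/core/rake_task'

FILES = Dir["spec/fixtures/*.yml"]
all_tasks = []

FILES.each do |file_name|
  # File.basename removes only ".yml"; String#delete(".yml") would remove characters.
  task_name = File.basename(file_name, ".yml")
  create_task = RSpec::Core::RakeTask.new(task_name.to_sym)
  # Common suite plus this fixture; testsuite_spec.rb reads the fixture back from ARGV.
  create_task.pattern = "spec/testsuite_spec.rb, #{file_name.split("spec/")[1]}"
  create_task.verbose = false
  # One HTML report per task, written with --out rather than a shell redirect.
  create_task.rspec_opts = "--format html --out reports/#{task_name}.html"
  all_tasks << create_task.name
end

# multitask runs all prerequisite tasks in parallel threads.
multitask :all => all_tasks
```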

Fixtures

The fixtures folder contains multiple configuration files. The pipeline reads the files in that folder and creates one task per file. Each task runs the common test suite, which is simply a set of different application-specific RSpec test suites. To arrange those suites within the common test suite as required, you can use shared examples. The Rakefile groups all the tasks under a multitask, which runs them in parallel.
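Rake's `multitask` is what provides the parallelism. The sketch below uses hypothetical fixture names and task bodies that only record their own names instead of running RSpec; it defines one task per configuration and lets a multitask run them concurrently, using only Rake's public DSL (`task`, `multitask`, `Rake::Task[]`).

```ruby
require 'rake'

# Hypothetical fixture names; in the framework they come from spec/fixtures/*.yml.
fixture_names = %w[crm_a crm_b crm_c]
completed = []
lock = Mutex.new # multitask runs prerequisites concurrently, so guard shared state

fixture_names.each do |name|
  # Each task stands in for "common RSpec suite + this configuration".
  task(name.to_sym) { lock.synchronize { completed << name } }
end

# multitask invokes all of its prerequisites in parallel on Rake's thread pool.
multitask(all: fixture_names.map(&:to_sym))

Rake::Task[:all].invoke
```

Because the prerequisites share a thread pool, the sketch records results under a mutex. In the framework itself each `RSpec::Core::RakeTask` runs rspec in its own subprocess, so the suites do not share in-memory state.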

Helpers

A helper keeps reusable functions out of the code that calls them, which makes views much easier to work with. For example, if you're always iterating over a list of widgets to display their price, put that logic into a helper (along with a partial for the actual display), or if you have a piece of RJS that you don't want cluttering the view, put it into a helper.
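The same idea applies to spec code in a framework like this one: a helper module collects functions that many specs need. The module and method names below are hypothetical; with RSpec you would typically mix such a module into example groups with `config.include FixtureHelpers` inside `RSpec.configure`.

```ruby
require 'yaml'
require 'tmpdir'

# Hypothetical helper module: fixture handling lives here instead of being
# repeated in every spec file.
module FixtureHelpers
  FIXTURE_DIR = "spec/fixtures"

  # Load <base_dir>/<name>.yml into a Hash.
  def load_fixture(name, base_dir: FIXTURE_DIR)
    YAML.safe_load(File.read(File.join(base_dir, "#{name}.yml")))
  end

  # Read a nested value using a dotted key such as "crm.retries".
  def fixture_value(fixture, dotted_key)
    fixture.dig(*dotted_key.split("."))
  end
end

# Any class (or RSpec example group) that includes the module gets the helpers.
class ExampleSpecContext
  include FixtureHelpers
end

ctx = ExampleSpecContext.new
loaded = Dir.mktmpdir do |dir|
  File.write(File.join(dir, "crm_a.yml"), "crm:\n  endpoint: https://example.test/api\n  retries: 3\n")
  ctx.load_fixture("crm_a", base_dir: dir)
end
```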

Models

A model holds all the code that relates to your data (the entities that make up your site, e.g., Users, Posts, Accounts, Friends). Code that saves, updates, or summarizes the data for those entities belongs in the models.
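As a small illustration, here is a hypothetical plain-Ruby model for a CRM contact. In a Rails service this would usually be an ActiveRecord model with persistence (`save`) built in; the sketch covers only the update and summarize responsibilities, which stay with the entity either way.

```ruby
# Hypothetical plain-Ruby model; an ActiveRecord model would add persistence.
class Contact
  attr_reader :name, :email, :deals

  def initialize(name:, email:, deals: [])
    @name = name
    @email = email
    @deals = deals
  end

  # Update: change data through the model, which can enforce its rules.
  def add_deal(amount)
    raise ArgumentError, "amount must be positive" unless amount.positive?
    @deals << amount
    self
  end

  # Summarize: derived data lives with the entity it describes.
  def summary
    { name: name, deal_count: deals.size, total_value: deals.sum }
  end
end

contact = Contact.new(name: "Ada", email: "ada@example.com")
contact.add_deal(1200).add_deal(300)
```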

Configuration

YAML (.yml/.yaml) files are widely used to hold project configuration data such as database settings or other project settings. A YAML file stores parameters as key-value pairs (mappings), typically one key per line; the mappings can be nested and can hold lists and typed values such as numbers and booleans, not just strings. In Ruby, the standard `yaml` library (for example, `YAML.load_file`) reads such a file into a Hash.
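To show what key-value pairs look like beyond flat strings, here is a hypothetical fixture parsed with Ruby's standard `yaml` library. The keys and values are made up; the point is that a single file can carry strings, integers, booleans, lists, and nested mappings.

```ruby
require 'yaml'

# Hypothetical fixture for one microservice; keys and values are illustrative.
FIXTURE = <<~YAML
  service: orders
  base_url: http://localhost:3000
  queue: default
  timeout_seconds: 5
  parallel: true
  crm_sources:
    - crm_a
    - crm_b
  database:
    adapter: postgresql
    pool: 5
YAML

# safe_load turns mappings into Hashes, sequences into Arrays, and scalars into typed values.
config = YAML.safe_load(FIXTURE)
```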

Results

RSpec's HTML formatter produces one test result file per task, named after that task.

Testing asynchronous queue jobs typically splits into two parts: first, testing the queue and its jobs; second, testing the business logic that the job executes. Testing the queue can be done with Sidekiq's testing support (e.g., pushing to a queue or subscribing to another microservice). Testing the business logic executed by the job on its own encourages keeping that logic separate from the infrastructure code. An end-to-end integration test, in turn, ensures that the components communicate properly: it runs simple business scenarios that touch all the components, then blocks on a queue waiting for a response or polls the database for the result. This gives the stakeholders confidence in the configuration.
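The split between testing the business logic and testing the end-to-end flow can be sketched in plain Ruby. All class and method names are hypothetical: a thread stands in for Sidekiq and Redis, and `wait_for` is a hand-rolled polling helper, not a library call. For queue-level tests, Sidekiq also ships a testing mode (`require 'sidekiq/testing'`, then `Sidekiq::Testing.fake!` or `Sidekiq::Testing.inline!`).

```ruby
# Business logic as a plain object: testable without Sidekiq, Redis, or threads.
class ContactSync
  def initialize(store)
    @store = store
  end

  def call(contact_id)
    @store[contact_id] = "synced"
  end
end

# Thin worker wrapper (hypothetical): with Sidekiq it would include
# Sidekiq::Worker; here it only delegates to the business logic.
class ContactSyncWorker
  STORE = {}

  def perform(contact_id)
    ContactSync.new(STORE).call(contact_id)
  end
end

# Poll until the block returns a truthy value, or raise once the timeout expires.
def wait_for(timeout: 2.0, interval: 0.05)
  deadline = Process.clock_gettime(Process::CLOCK_MONOTONIC) + timeout
  loop do
    result = yield
    return result if result
    raise "condition not met within #{timeout}s" if Process.clock_gettime(Process::CLOCK_MONOTONIC) > deadline
    sleep interval
  end
end

# 1) Business logic on its own, with no queue involved.
unit_store = {}
ContactSync.new(unit_store).call(7)

# 2) End-to-end style: the job runs asynchronously (a thread stands in for the
#    queue and worker), and the test polls for the result instead of sleeping blindly.
Thread.new { sleep 0.1; ContactSyncWorker.new.perform(42) }
e2e_result = wait_for { ContactSyncWorker::STORE[42] }
```

Polling with a deadline keeps the end-to-end test fast when the job finishes early and gives a clear failure when it never does.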

As the focus of test automation is shifting from GUI to API, here are some best practices that you can follow for API testing:

- Make sure basic request/response calls work consistently.

- Group your test cases by test category.

- Create test cases for all possible API input combinations for complete test coverage.

- Run a series of load tests to put the system under stress.

- Document the parameter selection criteria in the test case itself.

- Prioritize API function calls so that testers can keep to the timelines.

- Keep your documentation to a common standard so that everyone is on the same page.
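Generating "all possible input combinations" is a Cartesian product. The sketch below uses hypothetical parameters for a single endpoint and Ruby's `Array#product` to produce one hash of inputs per test case.

```ruby
# Hypothetical input dimensions for one API endpoint.
PARAMS = {
  status:   ["active", "inactive", nil],
  page:     [1, 50],
  currency: ["USD", "EUR"]
}

# Array#product yields every combination of values (the Cartesian product).
def input_combinations(params)
  keys = params.keys
  first, *rest = params.values
  first.product(*rest).map { |values| keys.zip(values).to_h }
end

cases = input_combinations(PARAMS)
```

Here `cases` has 3 × 2 × 2 = 12 entries, starting with `{ status: "active", page: 1, currency: "USD" }`. Because the count grows multiplicatively, large parameter spaces often call for a reduced selection such as pairwise testing, which is exactly the kind of parameter selection criterion worth recording in the test case.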

Unit testing alone is never enough in any SDLC. It catches potential bugs and regressions, but you still need an overarching testing strategy that includes integration and non-functional testing, and a TestOps team that tries to break the software for thorough quality analysis. Automation helps you get through all these processes. An automated integration test verifies the communication paths and interactions between components to detect interface defects. Automated queue testing scripts and jobs go deeper and validate the inter-service communication (messages) between the microservices. Automated UI testing covers the end-to-end testing of various business use cases, which reassures stakeholders and increases application reliability thanks to the rigorous testing done with the automation framework.

Identifying threats or issues in the early stages of development reduces the waste of human and financial resources. Automated testing processes in your CI/CD pipeline determine the overall health of the builds under test and thus speed up deployment to production.

Automated API testing is a must-have for any kind of software development and an immensely efficient and valuable asset for functional, load, regression, and integration testing. It empowers your TestOps and QA teams to have their say in the business logic and improve the functionality of the system. They are the ones who think from a stability point of view and ensure stable operation in production and long afterward. Where API calls and third-party tool integrations are involved, API automation facilitates integration and regression testing in an Agile environment. For complex solutions, manual validation would take an unreasonably long time. Automation saves you from these hassles by helping the TestOps team detect loopholes in the overall system, not just in an isolated element, at a much earlier stage, so fixes can be applied quickly. All you need is a team that can make the process effortless, and that is what Team Opcito offers.