Adding value to your TestOps with pytest

Posted By

Ashwini Bodke

The pytest framework is one of the best open-source test frameworks for writing test cases in Python. With pytest, you can test almost anything: databases, APIs, even a UI. It is especially widely used for API testing, and it is popular amongst testers because of its simplicity, scalability, and Pythonic nature. You can run specific tests or a subset of test cases, in parallel if needed. Test cases are written as functions, and assertion failures are reported with the actual values. Plugins can add code coverage, prettier reports, and parallel execution. And the best part is, pytest can also be integrated with other frameworks like Django and Flask.

Since pytest is widely popular amongst the QA community, let’s see what standard practices you should follow while writing any test case in pytest. I will elaborate on some of these practices using a few examples. Let’s start from the basics first - writing a test case.

The first test

Writing the first test case is always a big step for most of us. So, how do you go about it? By default, pytest looks for test functions named test_* in modules named test_*.py or *_test.py. Interestingly, pytest doesn’t need an __init__.py file in any test directory.

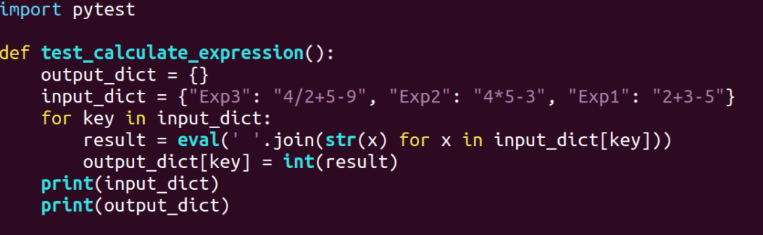

Example -

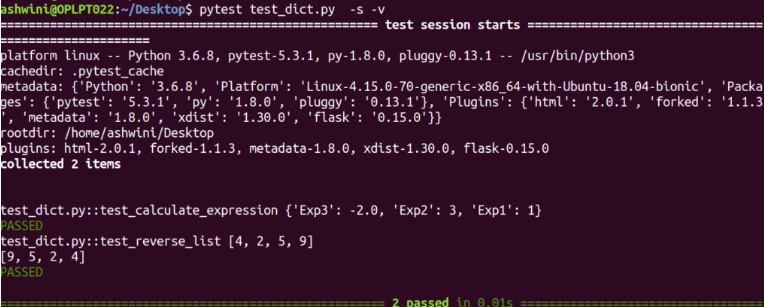
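As a minimal sketch (the module name test_example.py and the add() function are hypothetical, not the author's example), a first test module can be this small:

```python
# test_example.py: hypothetical module name; pytest collects it because it matches test_*.py

def add(a, b):
    """Hypothetical function under test."""
    return a + b


def test_add_positive_numbers():
    # Collected because the function name starts with "test"
    assert add(2, 3) == 5


def test_add_negative_numbers():
    assert add(-2, -3) == -5
```

No test class or __init__.py is needed. Because pytest rewrites plain assert statements, a failing check reports the actual values it compared.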

Now, if you run pytest -s -v, you will get output like this -

Here, -v (verbose) displays each test case name along with its pass or fail status, and -s turns off output capturing so that anything the tests print appears in the console.

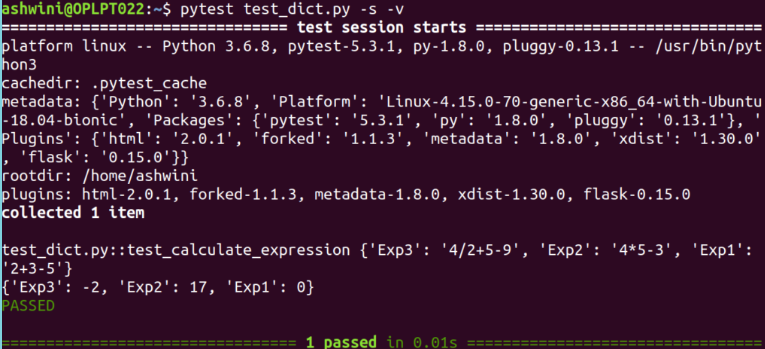

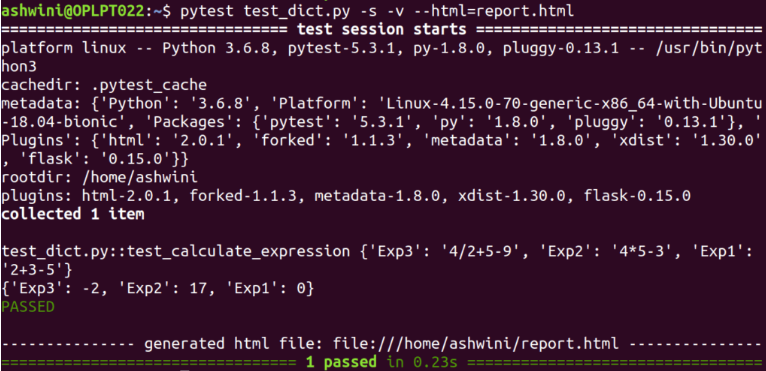

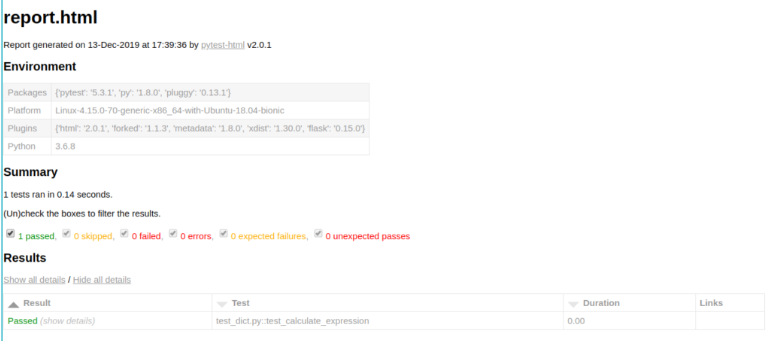

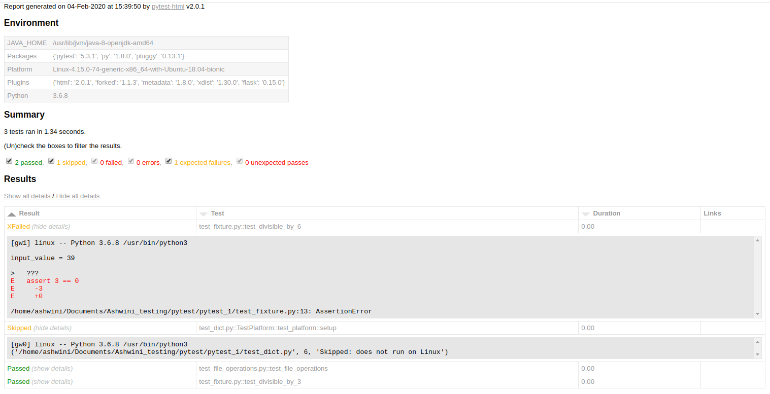

Generate HTML report

When pytest runs at the command line, its plain-text output can be hard to scan, especially for large test suites. In such scenarios, a visual report is a much better way to understand the test results. Adding the pytest-html plugin (pip install pytest-html) to your test project lets you generate an HTML report with one command-line option: pytest --html=report.html.

The report will look like this -

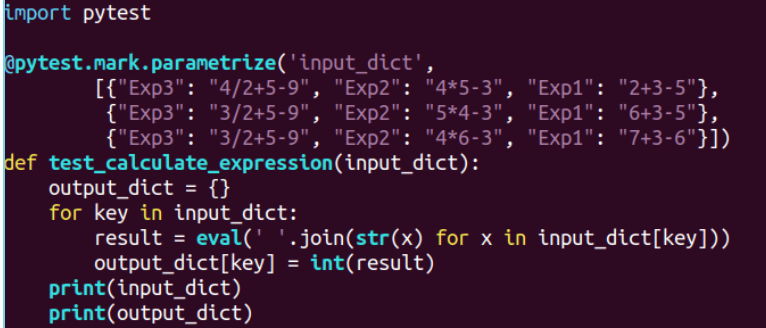

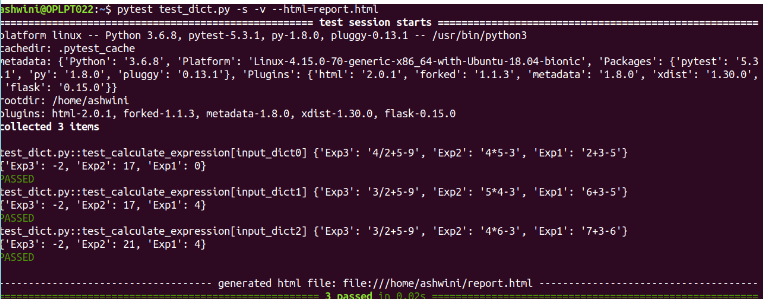

Parameterized test case

Pytest has a decorator to run the same test procedure with multiple inputs. The @pytest.mark.parametrize decorator substitutes tuples of inputs for the test function's arguments and runs the test function once per input tuple. If you want to perform data-driven testing, parameterized tests are a great way to do it.

Example -
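A minimal sketch, assuming a hypothetical multiply() function, where each tuple supplies one set of arguments:

```python
import pytest


def multiply(a, b):
    """Hypothetical function under test."""
    return a * b


# Each tuple supplies values for (a, b, expected); pytest runs the test once per tuple
@pytest.mark.parametrize(
    "a, b, expected",
    [
        (2, 3, 6),
        (0, 5, 0),
        (-1, 4, -4),
    ],
)
def test_multiply(a, b, expected):
    assert multiply(a, b) == expected
```

With -v, pytest reports three separate test IDs, such as test_multiply[2-3-6], so you can see exactly which input failed.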

The result will look like this -

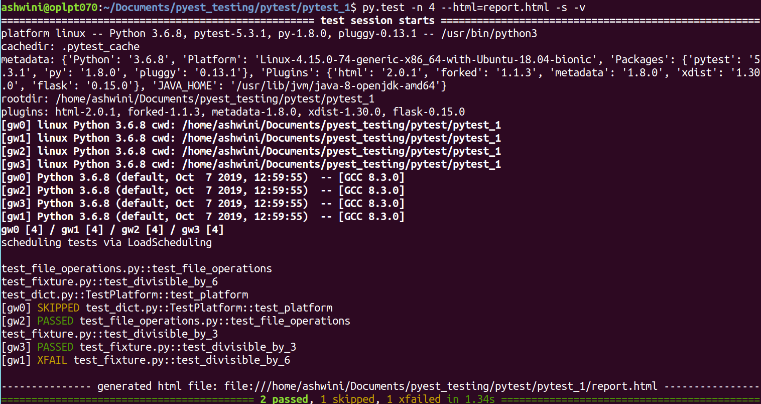

Parallel test execution with pytest

Large projects commonly have thousands of tests. Suppose each test takes one minute on average: 100 tests would take about 1 hour and 40 minutes to run, and at this rate, 1,000 tests would take about 16 hours and 40 minutes. That is a lot of time if you are looking at it from a CI/CD pipeline’s perspective.
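As a rough idealization (assuming the tests are independent, split evenly across n workers, and worker start-up overhead is negligible, which real suites only approximate), wall-clock time falls roughly in proportion to the number of workers:

```latex
T_n \approx \frac{T_{\text{serial}}}{n}
\quad\Rightarrow\quad
T_4 \approx \frac{1000 \text{ tests} \times 1 \text{ min}}{4} = 250 \text{ min} = 4 \text{ h } 10 \text{ min}
```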

To achieve truly continuous testing with a suite like that, you need to run tests in parallel, which is what the pytest-xdist plugin does. With pytest-xdist, you can scale up by running tests in several worker processes on one machine, and if you want to scale out, you can distribute the test execution to remote machines.

The -n option sets the number of workers. For example, pytest -n 4 starts four workers named gw0, gw1, gw2, and gw3, each a separate process, and distributes the test cases among them. pytest -n auto starts one worker per available CPU.
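Because xdist workers are separate processes, tests that share an external resource such as a database can interfere with each other. One common pattern, sketched here with a hypothetical testdb_gw0-style naming scheme, reads the PYTEST_XDIST_WORKER environment variable that pytest-xdist sets inside each worker:

```python
import os


def worker_db_name(base="testdb"):
    """Build a per-worker resource name (hypothetical naming scheme).

    pytest-xdist sets PYTEST_XDIST_WORKER (e.g. "gw0") inside each worker
    process; it is absent in a normal, non-parallel run.
    """
    worker = os.environ.get("PYTEST_XDIST_WORKER", "main")
    return f"{base}_{worker}"


def test_uses_isolated_database():
    name = worker_db_name()
    # Under `pytest -n 4` this is testdb_gw0 ... testdb_gw3; without -n it is testdb_main
    assert name.startswith("testdb_")
```

Install the plugin with pip install pytest-xdist, then run pytest -n 4 so each worker gets its own database name.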

The log file will look like this -

Although the time difference looks negligible when there are only a few tests to run, the savings grow quickly with a large test suite.

Run multiple tests from a specific file and multiple files

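Suppose you have multiple files, say test_example1.py and test_example2.py. To run all the tests from all the files in a folder and its subfolders, you only need to run pytest (or its older alias, py.test) from that folder. pytest collects tests from every file whose name starts with test_ or ends with _test in that folder and its subfolders. To run the tests from one specific file, pass its path, for example pytest test_example1.py, and to run a single test from it, append :: and the test function name.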

Run tests by substring matching

Suppose you want to run only the test cases whose names contain the substring “calculate”. Then you just need to run the following command -

pytest -k calculate

It will run all the test cases whose names contain “calculate” and deselect the rest.

Here, -k takes an expression to match against test names; it also supports and, or, and not, for example pytest -k "calculate and not slow".
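To illustrate, here is a hypothetical module test_math_ops.py; with pytest -k calculate, only the two tests whose names contain "calculate" would run:

```python
# test_math_ops.py: hypothetical module used to illustrate `pytest -k calculate`

def test_calculate_sum():
    # Selected by -k calculate: "calculate" appears in the test name
    assert sum([1, 2, 3]) == 6


def test_calculate_average():
    # Also selected
    values = [2, 4, 6]
    assert sum(values) / len(values) == 4


def test_login_page():
    # Deselected: the name does not contain "calculate"
    assert "login".upper() == "LOGIN"
```

With -v, pytest lists the two calculate tests and reports test_login_page as deselected.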

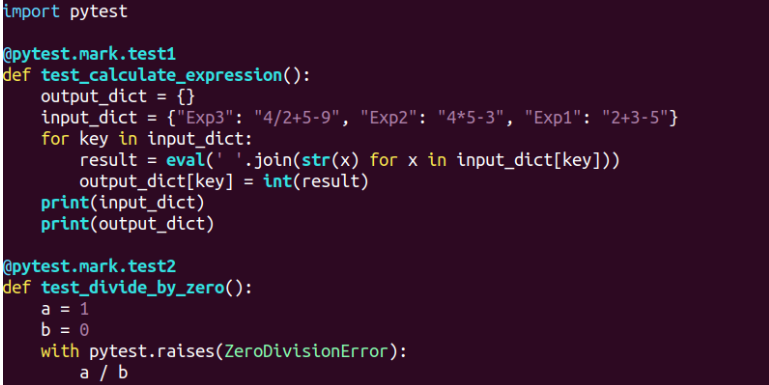

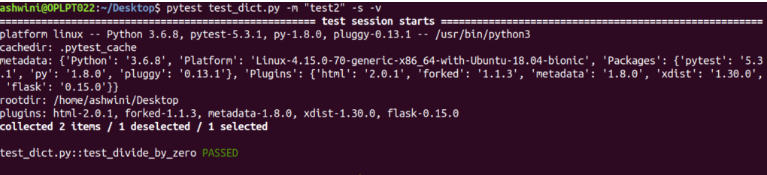

Run tests by markers

Pytest allows you to tag tests using markers, written as @pytest.mark followed by a marker name. To use markers, import pytest in the test file, apply marker names to the test functions, and then run specific tests based on those marker names. You define a marker on a test by decorating it with `@pytest.mark.<name>`, and you run only the tests carrying that marker with `pytest -m <name>`. Register custom marker names in pytest.ini, under the markers option, to avoid PytestUnknownMarkWarning.

Example -
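A minimal sketch, assuming hypothetical smoke and regression markers and a hypothetical apply_discount() function:

```python
import pytest


def apply_discount(price, percent):
    """Hypothetical function under test."""
    return price * (100 - percent) / 100


# "smoke" and "regression" are hypothetical custom markers (register them in pytest.ini)
@pytest.mark.smoke
def test_no_discount():
    assert apply_discount(50, 0) == 50


@pytest.mark.regression
def test_fifteen_percent_discount():
    assert apply_discount(200, 15) == 170


# A test can carry several markers
@pytest.mark.smoke
@pytest.mark.regression
def test_full_discount():
    assert apply_discount(80, 100) == 0
```

Here, pytest -m smoke would run test_no_discount and test_full_discount, while pytest -m "smoke and not regression" would run only test_no_discount.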

When you run tests with a specific marker, the result will look like this -

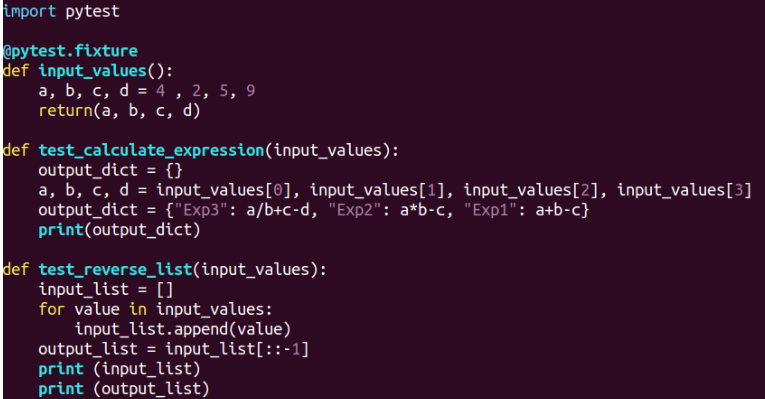

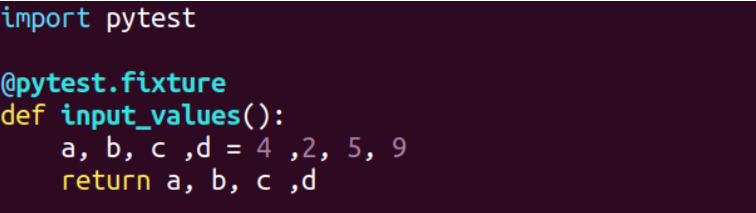

pytest fixtures

Fixtures let you run setup code before tests (and, optionally, teardown code after them) without repeating the same code in every test. A function becomes a fixture when you decorate it with @pytest.fixture, and a test function uses a fixture by naming it as an input parameter.

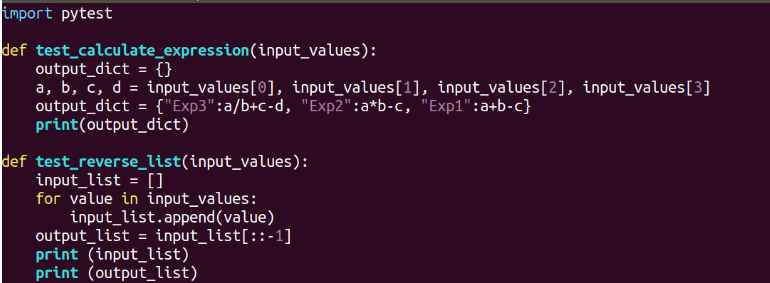

Example -
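A minimal sketch, assuming a hypothetical sample_user fixture. Writing the fixture with yield instead of return lets the same function also run teardown code after each test that uses it:

```python
import pytest


@pytest.fixture
def sample_user():
    # Setup: build the data each test needs (hypothetical user record)
    user = {"name": "alice", "roles": ["viewer"]}
    yield user
    # Teardown: code after `yield` runs once the test that used the fixture finishes
    user.clear()


def test_user_has_name(sample_user):
    # pytest passes the fixture's yielded value in as the `sample_user` argument
    assert sample_user["name"] == "alice"


def test_user_is_not_admin(sample_user):
    assert "admin" not in sample_user["roles"]
```

By default, a fixture runs once per test that requests it; passing scope="module" or scope="session" to @pytest.fixture reuses one instance across a module or the whole test run.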

The result will look like this -

A fixture defined inside a test file is available only to the tests in that file, so you cannot use it from another test file. To make a fixture available to multiple test files, define the fixture function in a file called conftest.py; pytest makes fixtures defined there available to the tests in that directory and its subdirectories, without any import.

The fixtures written in the test file above can be moved to conftest.py so that tests from multiple test modules in the directory can use them.

Here’s what the conftest.py file will look like -
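A minimal sketch of such a conftest.py, assuming two hypothetical fixtures, base_url and auth_headers, and a hypothetical API address:

```python
# conftest.py: pytest loads this file automatically, so test modules in this
# directory (and its subdirectories) can use these fixtures without importing them.
import pytest

# Hypothetical value for illustration only
API_BASE_URL = "https://api.example.com"


def build_auth_headers(token):
    """Plain helper so the header format can be reused outside fixtures."""
    return {"Authorization": f"Bearer {token}"}


@pytest.fixture
def base_url():
    return API_BASE_URL


@pytest.fixture
def auth_headers():
    # Hypothetical token; a real suite would more likely read it from an environment variable
    return build_auth_headers("test-token")
```

Any test module in that directory can then declare a test such as def test_health(base_url, auth_headers): and pytest injects both values.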

This will be your test file -

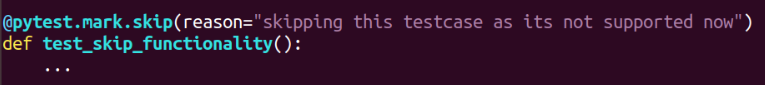

Xfail/Skip tests

There might be situations where you don't want to execute a test, or a test case is not relevant at a particular time. In such situations, you can either xfail the test or skip it, for example when the feature under test is not implemented yet or a known bug is not fixed. A test marked with @pytest.mark.xfail is still executed, but it isn't counted as a passed or failed test, and no traceback is displayed if it fails. A test marked with @pytest.mark.skip is not executed at all. The simplest way to skip a test function is to mark it with the skip decorator, which accepts an optional reason.

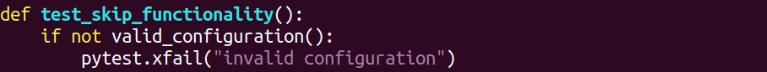
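A minimal sketch of the skip marker with a reason, assuming a hypothetical PDF export feature:

```python
import pytest


@pytest.mark.skip(reason="PDF export is not implemented yet")
def test_export_to_pdf():
    # pytest never runs this body; it reports the test as SKIPPED with the reason
    raise NotImplementedError("export_to_pdf")


def test_export_to_csv():
    # Runs normally
    assert ",".join(["id", "name"]) == "id,name"
```

Running pytest -rs adds the skip reason to the short test summary at the end of the run.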

Alternatively, you can skip a test imperatively during test execution or setup by calling the pytest.skip(reason) function:

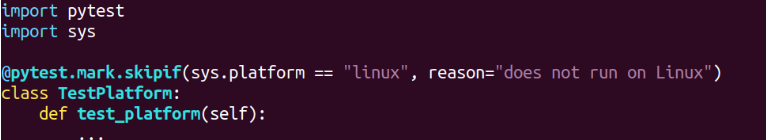
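A minimal sketch, assuming a hypothetical API_BASE_URL environment variable. The decision to skip depends on something only known at run time, which is where pytest.skip() fits better than a decorator:

```python
import os

import pytest


def test_live_api_health():
    # Hypothetical variable: only known at run time, so skip imperatively
    base_url = os.environ.get("API_BASE_URL")
    if not base_url:
        pytest.skip("API_BASE_URL is not set; skipping live API test")
    # Placeholder for a real request against base_url
    assert base_url.startswith("http")
```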

Skipif

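If you want to skip a test conditionally, use the skipif marker, @pytest.mark.skipif(condition, reason=...). The test is skipped when the condition is true, and a reason is required when the condition is a boolean. You can also apply this marker at the class level, in which case it applies to every test in the class.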

Example -

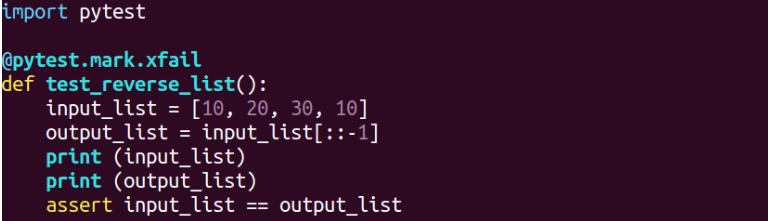
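A minimal sketch of skipif on a single test and on a class; the conditions and reasons are illustrative assumptions:

```python
import os
import sys

import pytest


# Skipped on Windows, where os.getuid() does not exist
@pytest.mark.skipif(sys.platform == "win32", reason="os.getuid() is POSIX-only")
def test_current_user_id():
    assert os.getuid() >= 0


# Class-level skipif: the condition applies to every test method in the class
@pytest.mark.skipif(sys.version_info < (3, 9), reason="str.removeprefix/removesuffix need Python 3.9+")
class TestStringHelpers:
    def test_removeprefix(self):
        assert "test_login".removeprefix("test_") == "login"

    def test_removesuffix(self):
        assert "report.html".removesuffix(".html") == "report"
```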

Xfail

If you expect a test to fail, use the xfail marker (@pytest.mark.xfail) to say so. The test still runs, but no traceback is reported if it fails. The terminal report lists it in the “expected to fail” (XFAIL) or “unexpectedly passing” (XPASS) section.

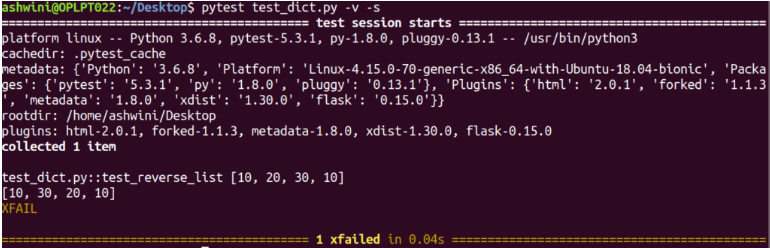

The result will look like this -

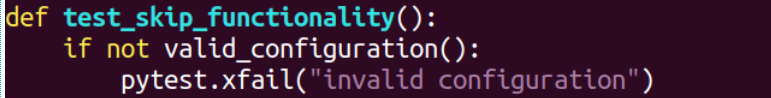
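A minimal sketch of the xfail marker, assuming a hypothetical parse_price() function with a known bug. It also uses strict=True, an xfail option that turns an unexpected pass (XPASS) into a failure:

```python
import pytest


def parse_price(text):
    """Hypothetical function with a known bug: it rejects thousands separators."""
    return float(text)


# The test runs; float("1,299.00") raises ValueError, so pytest reports XFAIL.
# With strict=True, if the bug is fixed and the test passes, pytest reports a
# failure instead of XPASS, reminding you to remove the marker.
@pytest.mark.xfail(strict=True, reason="parse_price does not handle '1,299.00' yet")
def test_parse_price_with_thousands_separator():
    assert parse_price("1,299.00") == 1299.0


def test_parse_price_plain():
    assert parse_price("12.50") == 12.5
```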

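Alternatively, you can mark a test as xfail from within a test or setup function by calling pytest.xfail(reason). Unlike the marker, this call ends the test immediately, so the rest of the test body does not run.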

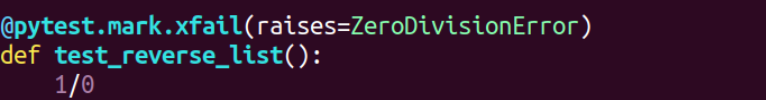
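A minimal sketch, assuming a hypothetical NEW_CHECKOUT_ENABLED feature flag read from the environment:

```python
import os

import pytest


def test_new_checkout_flow():
    # Hypothetical feature flag; pytest.xfail() ends the test immediately
    # (nothing after it runs) and reports it as XFAIL
    if os.environ.get("NEW_CHECKOUT_ENABLED") != "1":
        pytest.xfail("new checkout flow is not enabled in this environment")
    # Stand-in for real checkout assertions
    cart_total = sum([19.99, 5.01])
    assert round(cart_total, 2) == 25.0
```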

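To be more specific about why a test is expected to fail, pass a single exception class, or a tuple of exception classes, in the raises argument. The test is then reported as XFAIL only if it fails with one of those exceptions; any other exception is reported as a regular failure.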

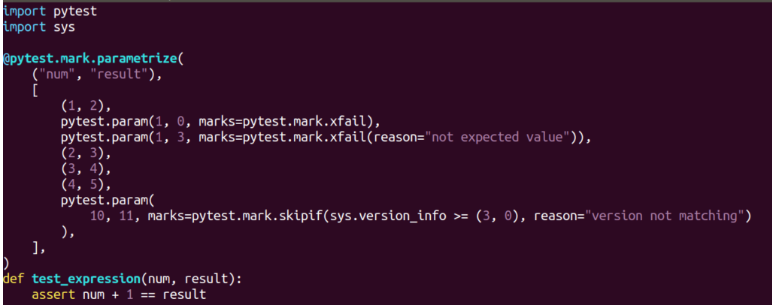
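A minimal sketch of the raises argument, assuming a hypothetical divide() function:

```python
import pytest


def divide(a, b):
    """Hypothetical function under test."""
    return a / b


# Reported as XFAIL only if the test fails with ZeroDivisionError;
# any other exception is reported as a regular failure
@pytest.mark.xfail(raises=ZeroDivisionError, reason="divide() has no zero guard yet")
def test_divide_by_zero_returns_none():
    assert divide(1, 0) is None


# A tuple lists several acceptable exception types
@pytest.mark.xfail(raises=(TypeError, ValueError), reason="input validation pending")
def test_divide_accepts_numeric_strings():
    assert divide("4", 2) == 2
```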

Skip/Xfail with parametrize

While using parametrize, you can apply markers such as skip and xfail to individual parameter sets by wrapping them in pytest.param(..., marks=...).
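A minimal sketch, assuming a hypothetical is_even() function; pytest.param wraps a single parameter set, and its marks argument takes one marker or a list of markers:

```python
import pytest


def is_even(n):
    """Hypothetical function under test."""
    return n % 2 == 0


@pytest.mark.parametrize(
    "value, expected",
    [
        (2, True),
        (7, False),
        # Marks apply only to this parameter set; the others run normally
        pytest.param(-3, False, marks=pytest.mark.skip(reason="negative inputs not in scope yet")),
        # "4" % 2 raises TypeError, so this case is reported as XFAIL
        pytest.param("4", True, marks=pytest.mark.xfail(raises=TypeError, reason="strings are not supported")),
    ],
)
def test_is_even(value, expected):
    assert is_even(value) == expected
```

With -v, the -3 case is reported as SKIPPED, the "4" case as XFAIL, and the other cases pass.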

Example -

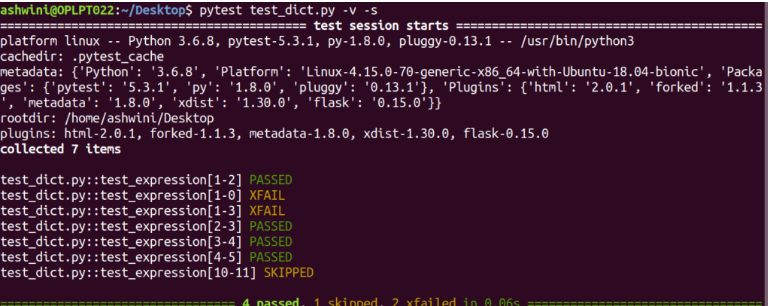

The result will look like this -

With the pytest framework, you can easily write functional test cases for applications and libraries, whatever their complexity. You can also use Selenium WebDriver with pytest for web testing. Features such as fixtures, parametrization, xfail, and skip make pytest even more powerful, and because it lets you run a subset of the entire test suite, test execution and debugging become faster.

This was just a quick overview of some of the standard practices and features in pytest that you can use to elevate your TestOps practices. Feel free to share your thoughts in the comments section below. Till next time, happy testing.
